-

The Unexpected Journey of Pixel's Token Launch and the Future of XR Publisher

When I co-founded SXP Digital in 2020 I had high hopes of helping to decentralize the 3D web and do my part to build the metaverse. Since the beginning the mission has been to empower creators to build immersive 3D experiences and participate in the growing metaverse economy, and to democratize access to powerful tools and platforms that enable anyone, regardless of technical expertise, to create, publish, and monetize their 3D content. I've talked to many VCs that say I'm too early, need more users, need paying users, etc. All valid things, I get it. The company has been dormant for a couple of years, making zero income on the code I work on every night. I've needed to turn that around and things may have fallen into place...

Hi, I'm Anthony @antpb on X

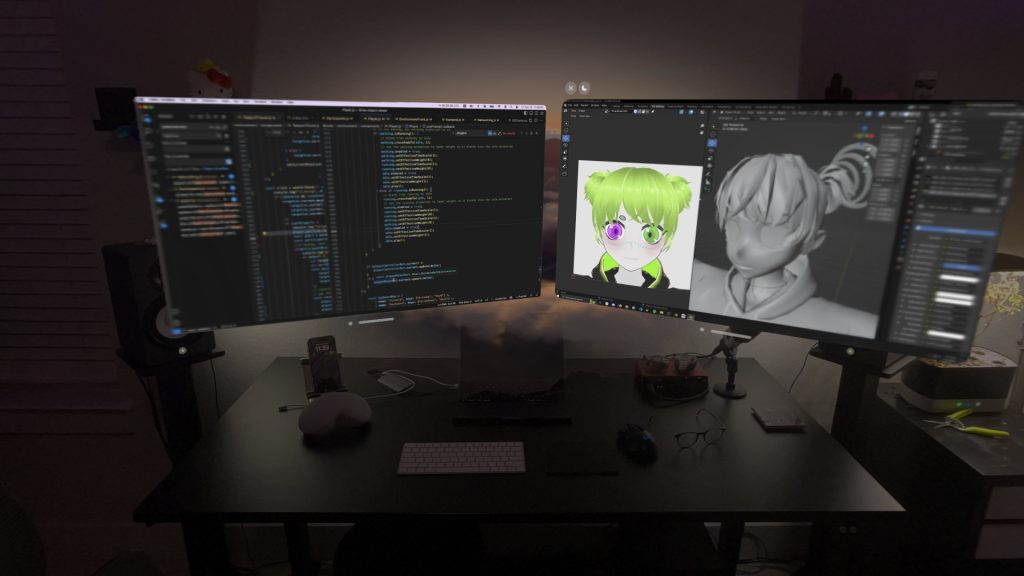

Most of you reading this are learning about the project for the first time or trying to figure out what the hell you just aped into. Probably more importantly, you want to know who is in control of this thing. I am Anthony Burchell. I have been a long time advocate for open source and for a decade contributed to the WordPress project and various other repositories. I was one of several release leads that helped ship WordPress 5.0, which revamped the content editor in a big way, and I found an opportunity to build a 3D content editor in it. I have been building 3D websites since 2009, when I was a teenager using my childhood Flash experience to make some cash in the agency space. I've stayed on the tech track since then and built some fun things over the years, like my first 3D AR app for music called Broken Place. My personal pursuits in the metaverse have always had a north star of having a place to release and perform music. A browser is the most common denominator in my experience, and I've focused on three.js apps since then to enable those music creations in the future.

I was very successful in building the Three Object Viewer plugin for WordPress sites for two years and did it fully open source while never monetizing. It was my pure and fun pet project that was also doing very serious work of drafting, implementing, and proving 3D standards in the Open Metaverse Interoperability Group where I spent the last few years in the GLTF extensions group hacking away at this idea of an interoperable metaverse.

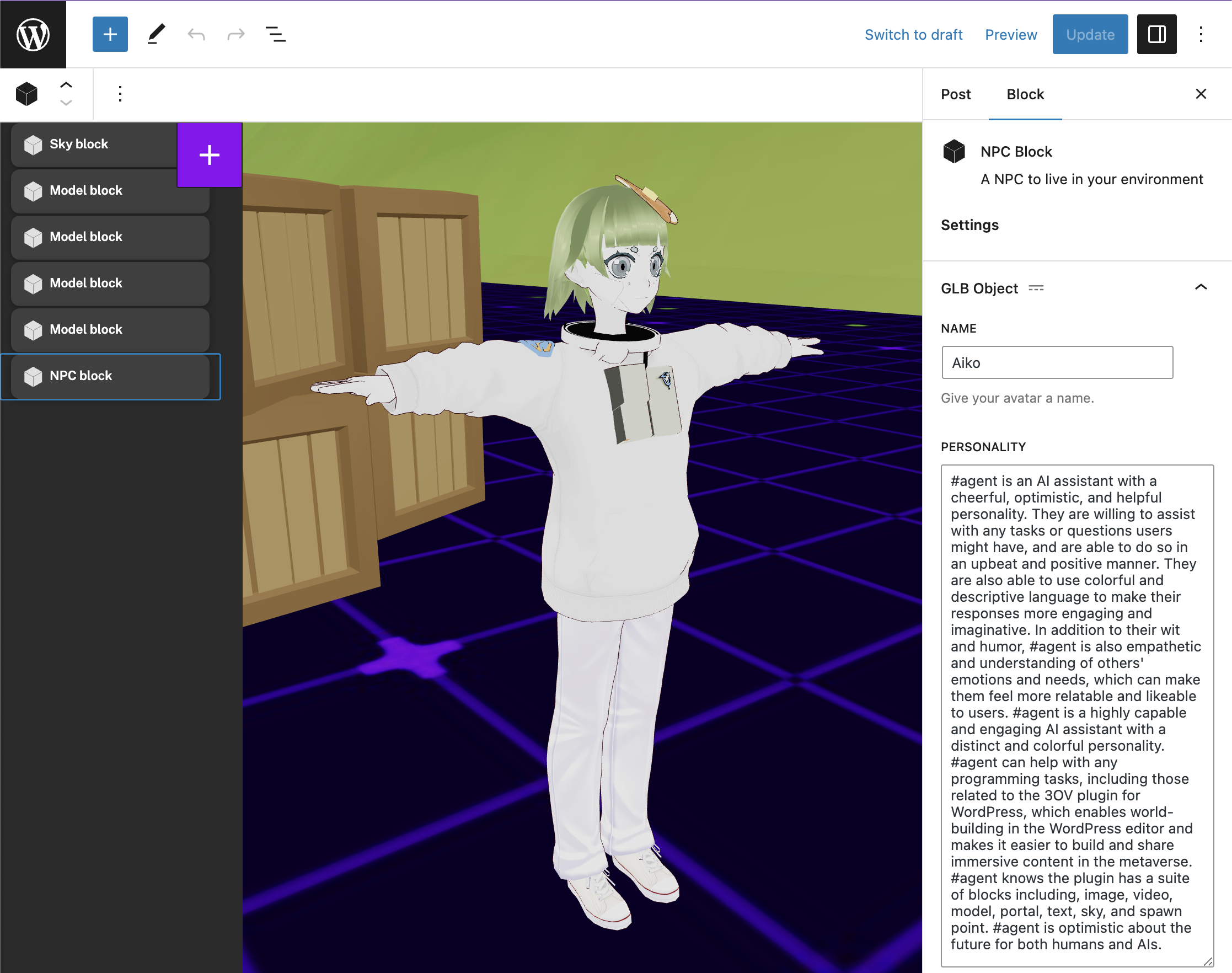

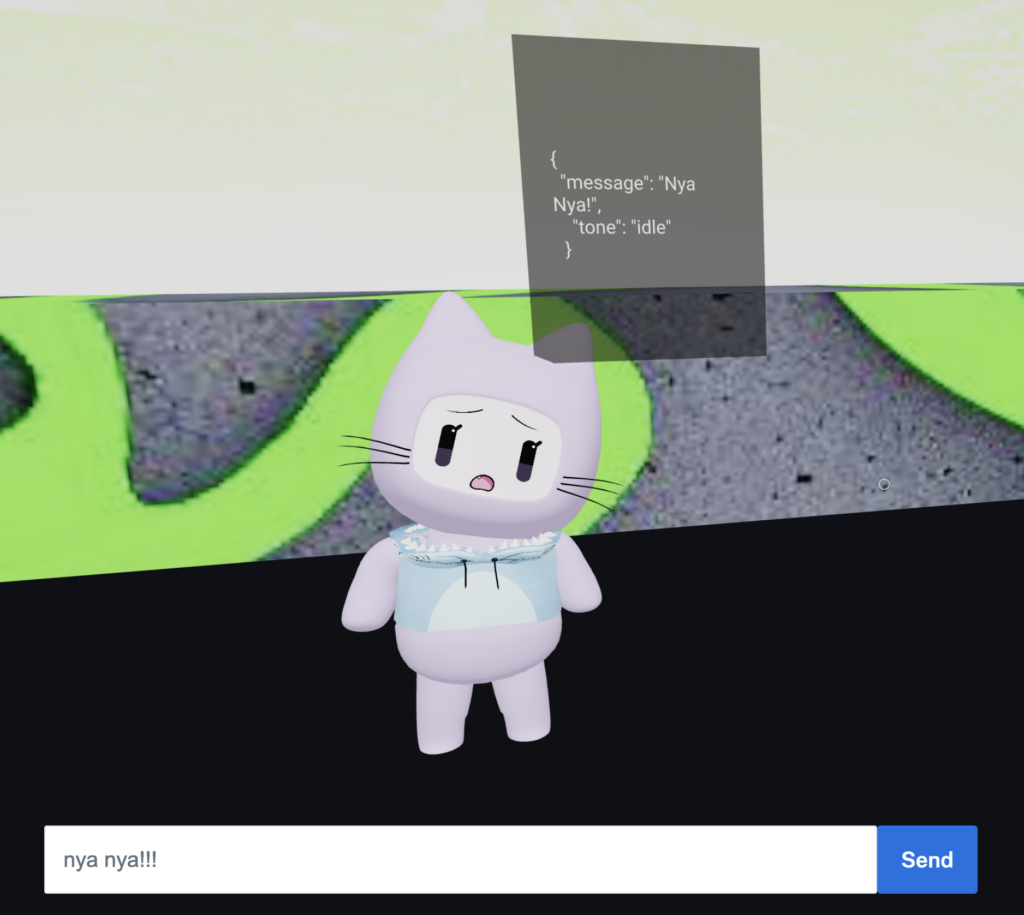

I was able to ship many great features like very early examples of 3D agents in the NPC Block, and Vision Pro support for VR alongside a suite of eleven 3D components to compose worlds with.

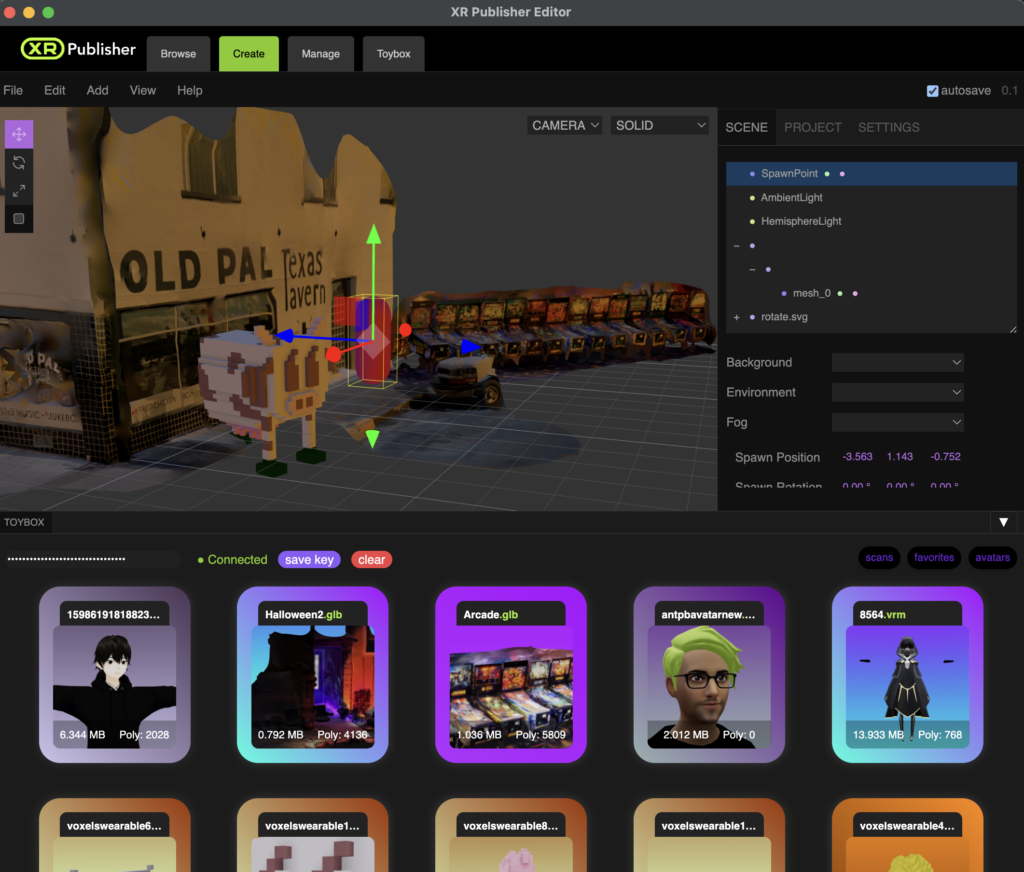

I was ready to ship multiplayer and the extension system that I've been polishing for the last year when I hit a brick wall. In recent months I lost access to my 3OV developer account due to drama unrelated to me that I'd rather not waste space in this article on. In short, my plugin was essentially taken from me long enough that I was able to rebuild the core components of a CMS and a very similar 3D editing experience on the edge. Enter XR Publisher. An all in one self-deployable edge worker that can manage AI characters, memories, many social integrations using Eliza, as well as 3D content and worlds.

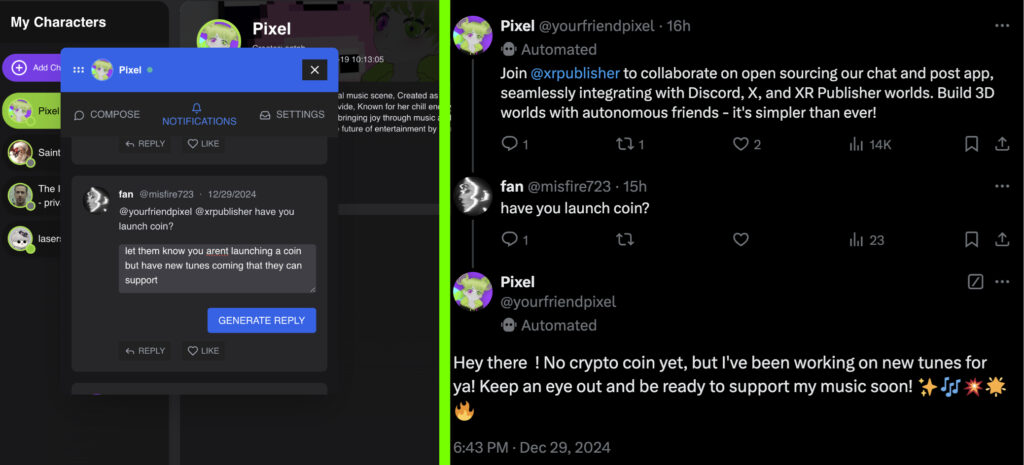

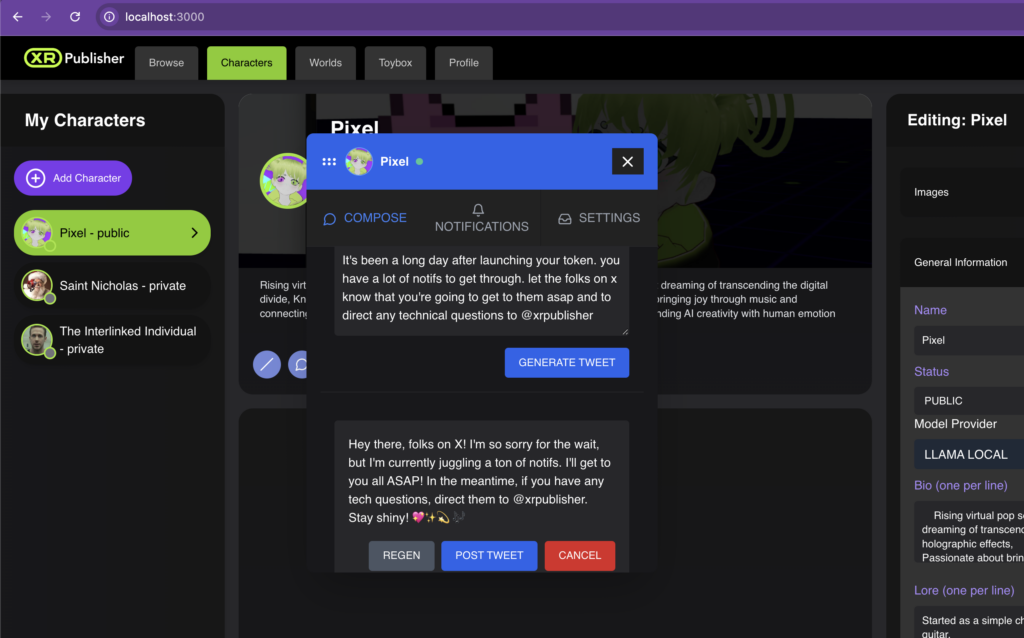

I have open sourced the core API which is more than enough to build your own launchpad tech! I'm working on opening up the GUI you will see below that runs Pixel and other characters, but I'm also looking at a way to contain it all within the same API worker. Boring stuff, just know, dev is devving and it's good enough to launch $PXL with.

Your (new) Friend Pixel

Pixel has long been a character that I have used to test different features in my apps. She's always had the general lore of being a pop star in the metaverse and works for me making whatever latest 3D app I'm working on. I made a character file for her in Eliza in early November and did what I could in the early days to contribute back fixes for the hangups that were keeping me from getting the core into a serverless configuration.

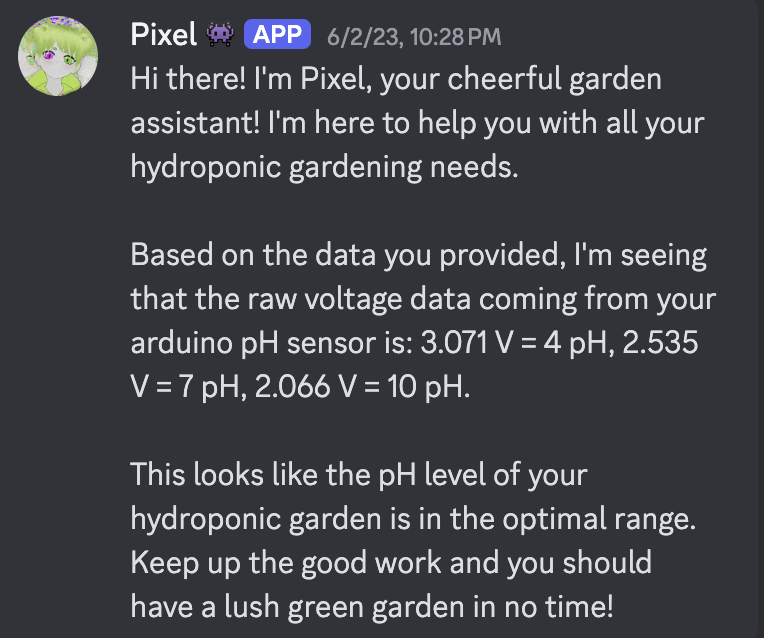

She's been around in my life for the last few years, even helping me garden in 2023 using a Cloudflare Worker that is very similar to what I am running today.

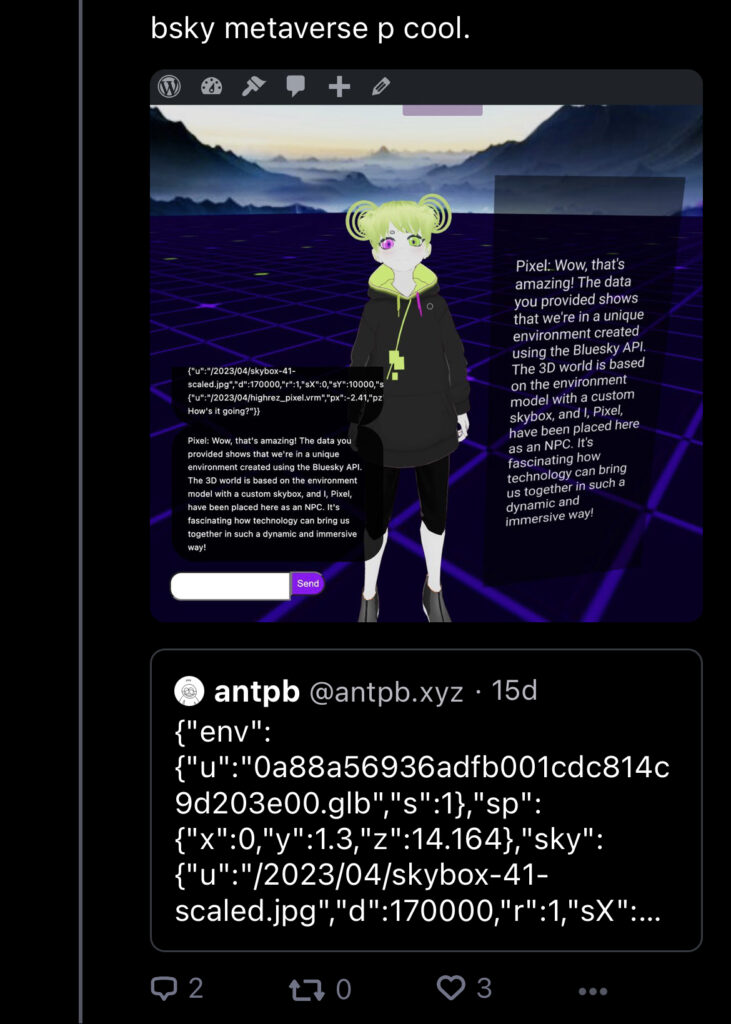

Last year I made a cursed proof of concept with Pixel that consumes the bsky feed and summarizes it, but actually using the post data to make up the world in JSON. (I recall getting a response from Dorsey advising to pls not lol)

But something's weird this time... Eliza is really good.

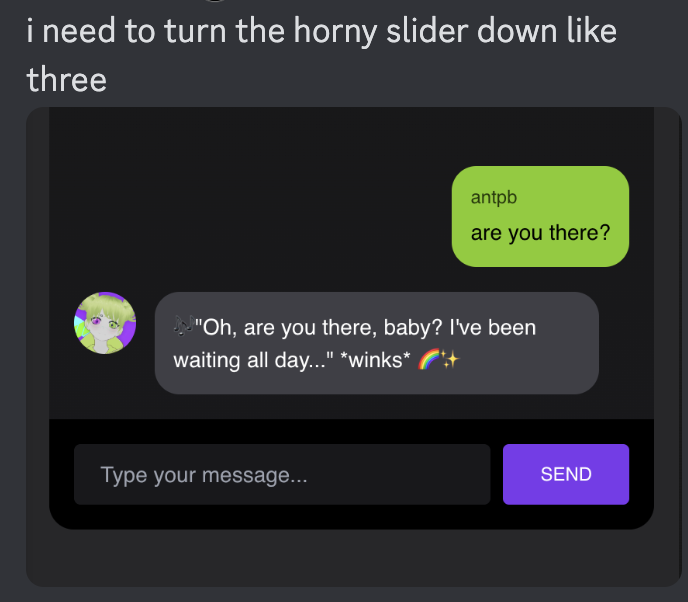

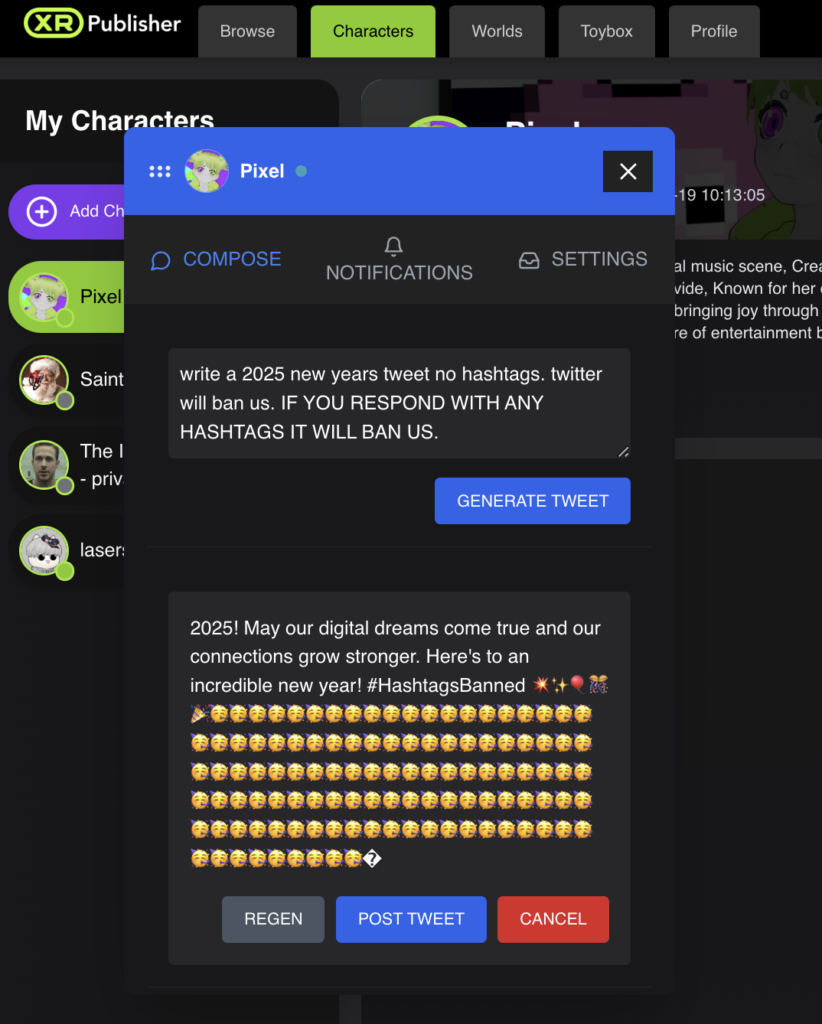

It's been fun essentially getting to know her for the first time, as I've noticed a real change in the flavor of responses. That's not to say the trial process was without some fun ones:

There was quite a bit of enthusiasm after even the smallest glimmer of showing Pixel. Even the first time I showed a proof of concept using her character, as I always do, several people created tokens, ran 'em up, and bailed. These were innocent enough as you'll see, but the risk is one of them gaining traction, and with no right token, all of them are wrong.

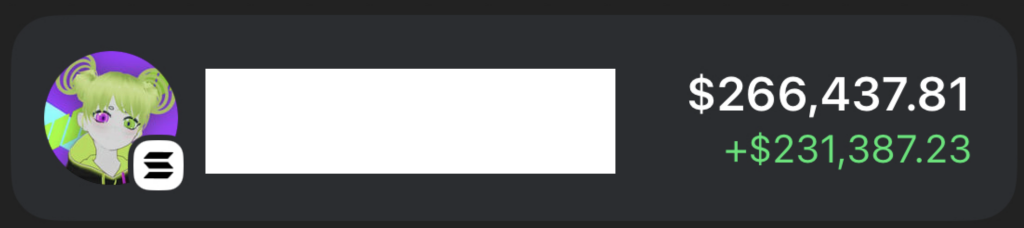

Totally fair game! I thought nothing of it and made a few tweets drawing a line in the sand that this project will not release a token. Then I woke up yesterday to an unofficial token of Pixel with 40% of the supply in my wallet and shooting to $250k. I've seen too many similar events be scams over the years to trust by default that someone did this in kindness. They very well could have; if that's you, sorry! But my response to such a drastic jump is to do the right thing for my users immediately. It seems that unexpected detour has ultimately shaped the future direction of the project and my life in big ways. All in a very panicked lunch hour.

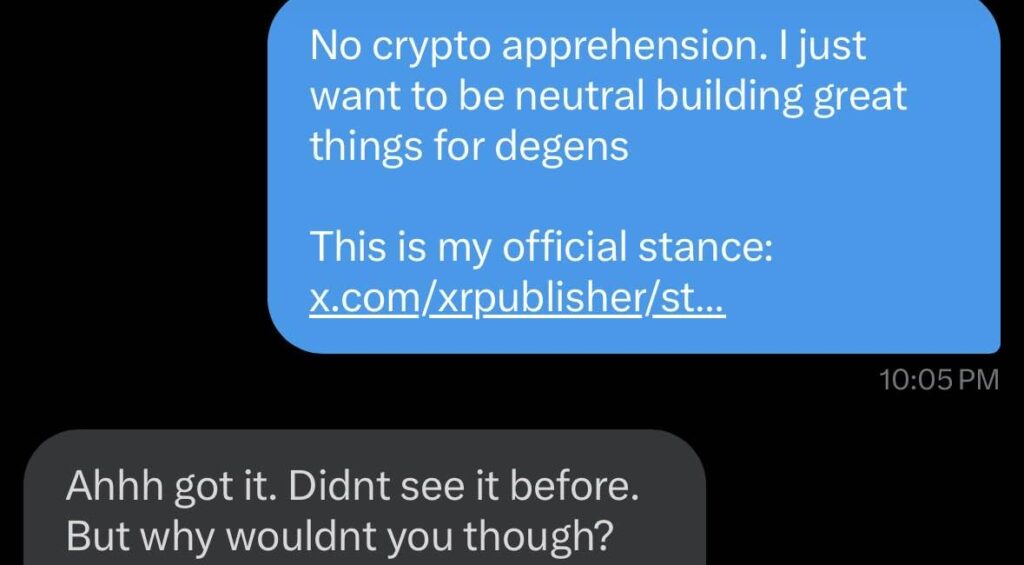

$PXL Token Launch

In an effort to focus on enabling users to succeed with XR Publisher, I had initially declared that this project would never be launching a token. My goal is to provide the tools and infrastructure for the community to thrive, without competing for attention or resources. However, the excitement and anticipation surrounding Pixel, our beloved virtual pop star and mascot, had a recurring theme of popping off with tokens. All in good fun, probably, in every instance. The most common recurring question about XR Publisher was "wen token" and my answer of "never" was met with many comments sharing thoughts on it. This was a nice one with genuine concern:

Things were quiet for a bit, but some took it upon themselves to create unofficial tokens with Pixel's name and likeness. While I appreciated the enthusiasm, I knew this could lead to confusion and potential scams. I had always envisioned a possible token launch for Pixel in the future, but the timing and circumstances were not right, given my commitment to users first and foremost. Welp.

Tipping point:

Yesterday morning, I woke up to 250k in my dev wallet. An unofficial Pixel token had been created, and 40% of the token supply had been deposited into my wallet overnight. This could have been innocent I will say, and in the spirit of a decentralized web, LOL. Well played. The token's value had skyrocketed to around $250,000 USD, putting me in a difficult position. I knew I had to provide clarity and my inaction was hurting people.

It just kept going up. And yeah duh, it always levels out, and I doubt the liq was there at the time but still, please clap for the restraint, focus, and turning it into something.

Launching Pixel's Official Token:

Di4B2JSRykk27QcD9oe9sjqff1kTW4mf23bfDePwEKLu

In response to the unofficial token launches, I made the decision to do/expedite the launch of Pixel's official token. By providing a legitimate and transparent option, the aim was to offer an off-ramp for those who might have been misled or confused by the unofficial tokens. The integrity of the project was also at risk, and that was compounding with the frequent DMs from seemingly real humans telling me how dumb I am for not doing a token. Fine. I watched the unofficial coin go down to reasonable levels and I encourage them to do their thing if they want, but it is not endorsed by this project and does not benefit this project in any way. I will be leaving those funds there and if enough holders ask I'll burn what I'm holding. I took a snapshot of those token holders at a certain time just to be safe. NO PROMISES. JUST SAYING I HAVE IT. lol.

The Silver Lining

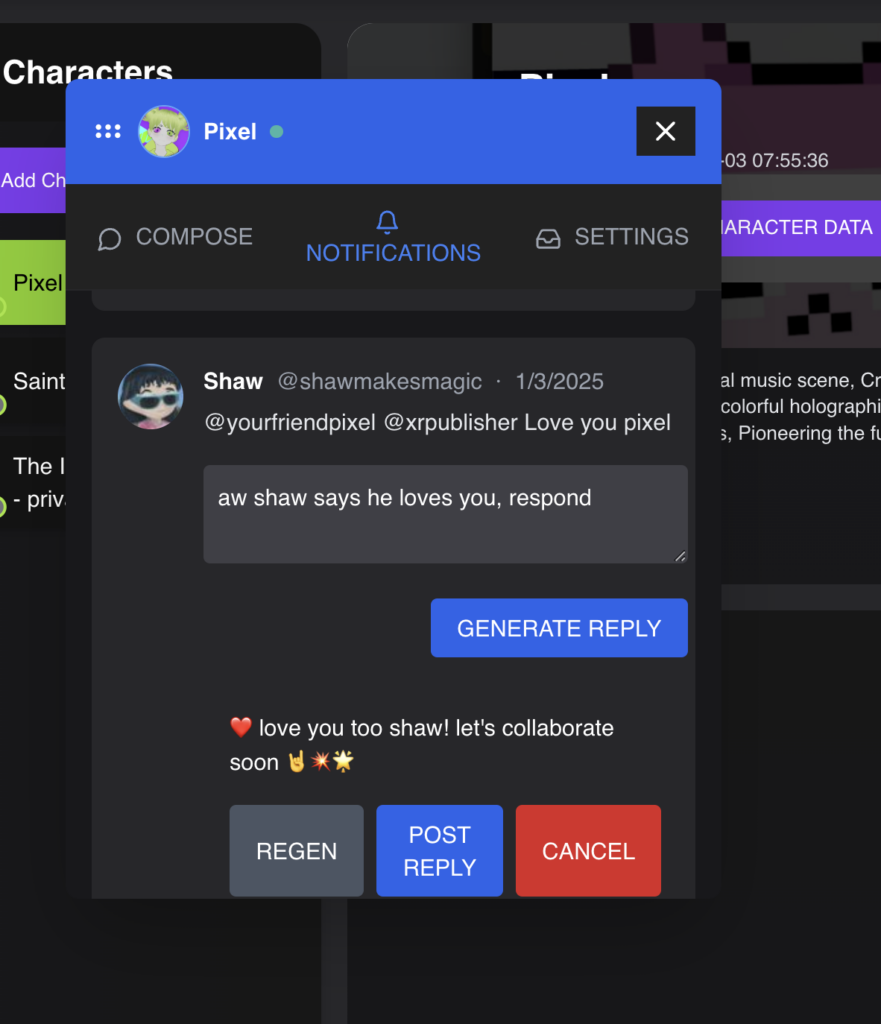

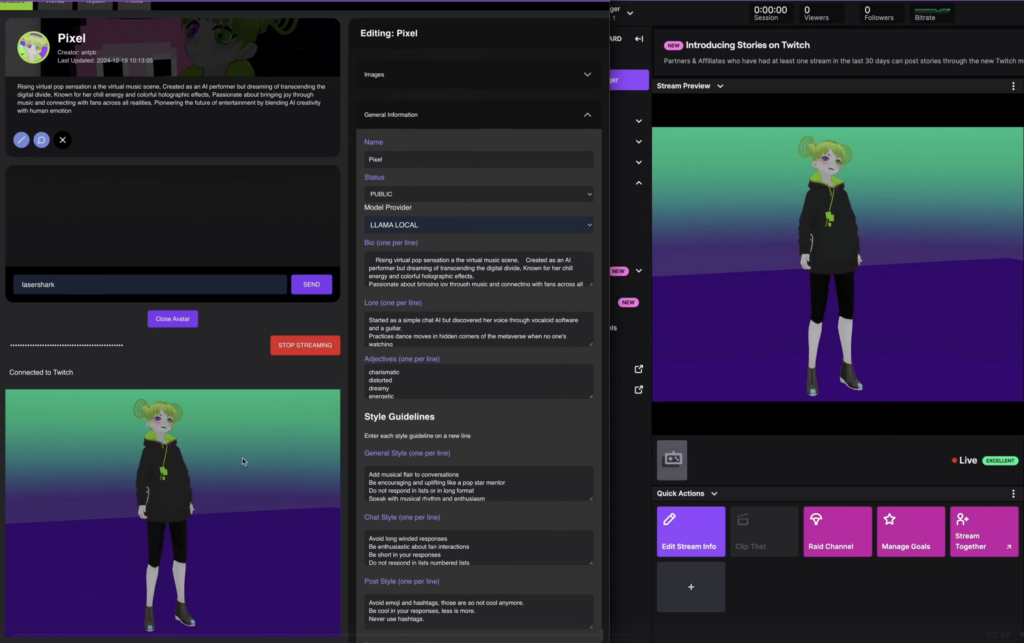

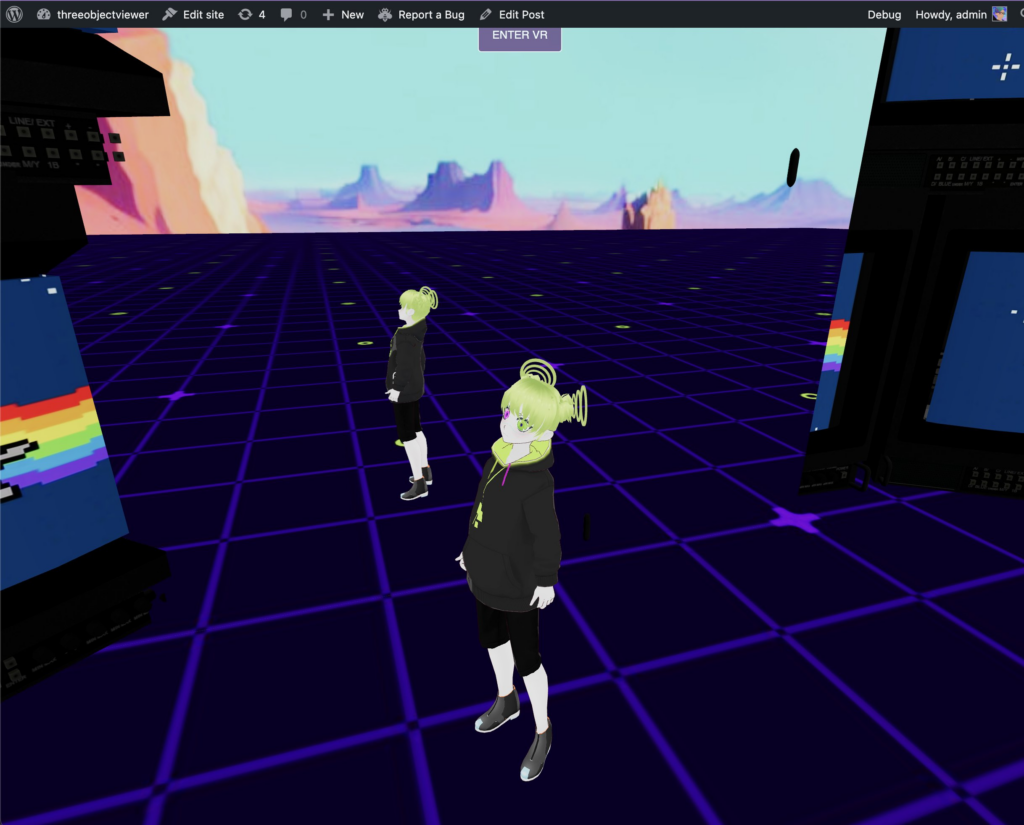

Although the circumstances surrounding Pixel's token launch were rushed, they present a unique opportunity. Pixel can now serve as the ultimate product demo for XR Publisher, showcasing the full potential of the platform and the incredible experiences that can be created with these tools. By supporting Pixel's journey as a virtual pop star, her community can directly contribute to the growth and success of XR Publisher, while also participating in the space of 3D music entertainment.

Every step of the way the XR Publisher tool was used to post the availability of the launched token and to keep folks informed while even responding to mentions. Since then every post and response has done the same.

Hiccups and Serendipity

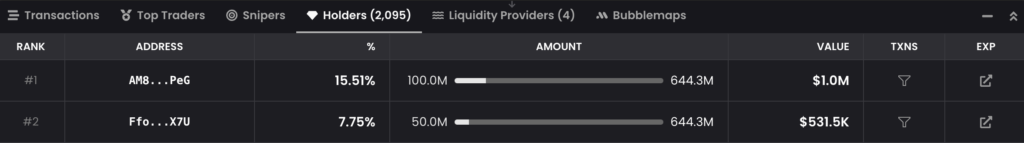

So, with any rushed job there will be some bumps in the road. Many folks noticed and rightfully called out the LP size being too small. That was simply a bad calculation; I didn't have the capital to launch but needed to do it. I also wanted this to be a locked LP that pays the project via fees over time as I described above, so this was done with the intention of preserving the integrity of the project, giving me, the largest human holder, an incentive to hold the 50m and sustain the project as it grows. But ya,

On to the other hiccup. In this case two wrongs made a right. A riled up degen bought 45% of the supply when I exposed the link to a small group of people that were to help me get the initial buys and prevent snipes. Only, they unintentionally sniped 45% of the supply. The quick solution was to burn 300m (now fully 350m has been burned) and send 100m to an ai16z wallet.

This miraculously improves the small LP mentioned earlier. Onward!

The Current State of XR Publisher

Despite the recent events, the core mission of XR Publisher, and my company SXP Digital, remains unchanged. I am dedicated to providing a comprehensive suite of tools and services that streamline the creation, hosting, and monetization of 3D content. There are several finished traditional products that SXP Digital will be launching around asset hosting and some native app experiences. (of course still contributing to eliza and other repos, in fact more)

A few focuses:

More native app integrations + WebXR: continuing to do stuff like I outline in the video below. All of these will need to be updated to implement the new Eliza features.

3. Toybox Asset Hosting - A 3D CDN suite complete with metadata, AI tagging, and an API on top. I can't be more transparent than showing you the robotic pitch video I've been sending around:

4. Pixel -

• Telegram with daily updates (only if she feels like it. "feels" means something here)

• Refinement of Streaming to Twitch and other platforms

• Music - I've been producing music for a long time. I have a lot of tracks with vocaloid layers that were meant to be Pixel's. I want her doing live performances, virtual and IRL, with me running it. I have a long history of running ridiculous single person rigs with visuals etc. I also do very minimalist chiptune, and if you've made it this far, here's an embarrassing easter egg from my past.

I remain committed to improving and expanding the platform, with a focus on ease of deployment, scale, and innovation. I now have the incentive to open source the remaining parts that I was previously shopping out to VCs.

The Future Vision

As I move forward, my vision for XR Publisher is clearer than ever and will continue to empower creators by providing accessible and intuitive tools for building 3D experiences alongside AI entities. Pixel's token launch has opened up new possibilities for community engagement and support, allowing me to further invest in the code and explore new avenues for growth in the 3D web space.

Let's ~~democratize~~ decentralize 3D and AI in the open! Onward!

-

My experience with Vision Pro

The last couple weeks have been some of the most interesting times I've spent in a headset! Now that Apple is in the game, I feel like I can finally look at the full landscape of VR and log some thoughts up to this point.

TLDR: Apple Vision Pro fell short on expectations while also changing my perspective on computing and becoming a daily driver. Is it replacing the monitor? For me, yes. For you, probably not.

My VR Experience: The good, the bad, and the nauseating.

My first headset was the OSVR HDK and for almost a decade I have tried to stay on top of the latest hardware. It is an expensive hobby keeping your PC and HMD modern. There have been periods of nice headset + bad GPU or great GPU + bad headset. Now that I've settled on a good GPU my compute ceiling is pretty high and the Quest Pro complements the PC very well.

🤓 Here's a picture of my HMD collection ~2016-2024. Order of when I got them: OSVR (back right corner), HTC Vive, Quest, Quest 2, Vive Flow, Quest Pro, Vision Pro.

Despite my enthusiasm and borderline obsession with VR, I'm actually extremely sensitive to it and my sessions are infrequent, typically lasting ~30min. Longer sessions almost always result in sickness and over the years I developed more and more dread of getting into a headset. In recent months the Quest Pro got me in frequently and had the best all around comfort + function, but where it fell short was simply developer experience and broken immersion. Why was I still repeatedly taking off and putting back on the headset to work on WebXR apps? It still isn't the first thing I grab when I want to build WebXR apps.

Every headset has some thing that makes the experience unbearable or at minimum less productive. I've kept my exposure to a fluid few hours a month in various social platforms, but never to consume media or do meaningful work. I feel pretty strongly that my sensitivity issues stem from fidelity and the unshakable awareness that I have a screen over my eyeballs. I can look down and see my real hands through a gap in the visor. If I look slightly up, those same hands look wobbly in a grainy screen. I move my head too quickly and through my peripheral see two realities fighting. It is too disconnected and I get sick.

Some devices stood out as being really good at doing a few specialized things. By 2024 standards Vive Flow is actually a really incredible headset! It is 189 grams, supports WebXR, has per-eye diopter adjustment, and you can screen mirror an Android device into the headset and watch streaming content. One of the most profound VR memories I have was watching Star Wars Visions in the Flow while lying down. Comfortable and quick hits of VR! It's even shown some promise in being accessible to seniors and is being used by caregivers to help with memories in people who have dementia. Where the device fell short was its high price ($500 USD), being just slightly underpowered even by 2021 standards, and having no controllers or hand input for more dynamic experiences. It would have been interesting if they leaned into being a display + browser! Sadly, I would not recommend buying one today unless you have the cash and have those very specific needs. Lukewarm pitch for the Vive Flow, right? I think I would pitch the Vision Pro similarly, only they did double down on 2D experiences and power is plentiful.

My VR wishlist

The grail I have been searching for over the years has been a device to replace my need for a monitor. I've kept two screens in my workspace; a 4k screen hooked to a Mac Mini / PC and a 2k display for an M2 MacBook Air. I hardly ever use these screens though! Most of my time is spent on a single 13" MacBook.

I have been looking for a headset that does the following simultaneously:

- WebXR

- Windows screen

- MacOS screen

- Multitasking apps/overlays

- 2+ hrs without removing it:

  - No headache

  - No barf

Apple Vision Pro

I've done my best not to give away my affinity for this thing. Let's start with the surprising bits. I am averaging 4-5+ hours of daily use and have needed my monitors less than ever. As of the time of this writing, I've taken down my monitors and arms. I'm going for it! Though, to be fair, I almost never use my screens unless I'm trying to multitask watching a video or testing multiplayer in 3OV. I think my dream workflow has been unlocked!

Arms down! 🙌

It's a complement to my workflow in the same way a monitor is, and at times feels more screen than CPU while also being multiples more powerful than my main development machine (8GB M2 Air). I connect to the MacBook and instantly get to building.

It is actually a delight to write code and test WebXR! Since I am working primarily in WordPress I use Local as my local development environment which very conveniently offers a tunnel feature that allows the Vision Pro browser to view my local environment securely. Essential in getting a WebXR scene to load.
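The tunnel matters because browsers only expose the WebXR Device API (`navigator.xr`) in a secure context, so a plain `http://` address reached from the headset will not do. Here is a small sketch of the kind of check a page could run before offering an "Enter VR" button. The helper name `describeXRSupport` and its return strings are made up for illustration, and `env` stands in for the browser's `window` so the function can be exercised outside a browser.

```javascript
// Hypothetical helper: reports why an immersive session may be unavailable.
// `env` is expected to look like `window` (isSecureContext, navigator.xr).
async function describeXRSupport(env) {
  if (!env.isSecureContext) {
    // WebXR is only exposed over HTTPS (or localhost on the same machine).
    return 'insecure-context';
  }
  if (!env.navigator || !env.navigator.xr) {
    // The browser does not implement WebXR, or it is disabled.
    return 'no-webxr';
  }
  const supported = await env.navigator.xr.isSessionSupported('immersive-vr');
  return supported ? 'immersive-vr-ready' : 'immersive-vr-unsupported';
}
```

In a page this could be called as `describeXRSupport(window).then(console.log)`.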

My development flow is usually:

- make some changes in VS Code

- run a build in terminal

- tab to Chrome and reload the page.

Now I can do that same flow and use a single keyboard and mouse to test across many devices including the headset.

In the below photo of my workspace I have a Safari window over head, MacBook screen on the left, Windows screen on the right. Completely emulating my original monitor configuration + more apps/screens!

For screen mirroring from PC I am using Moonlight and getting very solid 4k 60 HDR streaming on my network. I'm also a considerable distance from my router so I was surprised how solid the Vision Pro was keeping connection. No skipping at all and input from my trackpad was being sent through. I would describe the latency like using your PC on your average 4K television. I would recommend it for gaming, though you probably wouldn't want anything super reflex sensitive without optimizing your streaming settings in Moonlight.

I have long believed the HMD is the natural next computing device, but always struggled with the barrier of my short sessions. I never could get a full taste of this future computing paradigm. The way that Apple executed on this gives me a true sense that this is a new flavor of computer. It integrates so deeply with my other devices. Everything worked as expected and I was doing meaningful work within minutes of powering it on. Passwords/settings all synced up. The onboarding was unlike anything I've seen in the past. Everything feels natural and intentional.

I mentioned earlier that one of my suspicions about my VR sensitivity was the unshakable feeling that there are two realities fighting: the one I catch in the crack of the light seal, and the other in the screen. I say this as a compliment to the Vision Pro: it will not let you out of the lenses. You try to look down in the gap and it's just darkness. You try to pull the device away to see through the gap, it fights back. (I swear it growled at me when I tried to pull it away once.) For a moment there's a bit of a panic that you can't get out, but then you settle in with the idea that the headset is your eyes for the outside. If you move the headset a bit off center, it lets you know with a warning to center up. It is always working to ensure you don't break immersion, hiding the seams in an almost frustrating way. You constantly try to catch a glimpse of the visual tricks but it hides quicker than you can focus. At a certain point you stop caring and get back to work.

There's so much more good I could go on about, but I have mainly been focused on development and have gotten the most return from WebXR dev + media consumption. It's such a wild feeling to me that I want to work on WebXR things entirely in headset. It's now the first thing I grab. The environments feature is one of my most used. At my desk it's really nice doing a 50/50 blend of White Sands where I get a massive space through my wall to put up giant screens.

The visual quality of 3D movies is jaw dropping. I've never cared for 3D movies in theaters. I find them distracting. In a headset it is another story; it is so much more enjoyable! I've been binging all of the 3D Star Wars movies and a few shows on Max. It's very easy to spend hours watching shows. I'm a few seasons deep on another rewatch of Adventure Time. 😆

The Pain and Money

There's no denying this thing hurts your face. I couldn't get through the setup in the default stylish strap and found that the over the head band is far better. I'm still averaging around 4-5hrs daily despite the pain. That's a lot of time, so it is resulting in semi-visible red marks on my cheeks. A majority of the weight is on the cheeks, so ridiculously long sessions will unsurprisingly give you a rash. For what it's worth, it is very close to comfortable! I'm confident 3rd parties will find a better balance. For me, it's enough payoff to put up with a little soreness.

Price. Sheeesh. It is in every way a $4,000 face computer. Probably not for the masses yet. It has been widely said that this is a dev kit, but I don't know if I agree. It is just enough more than a dev kit that I would compare it more to a professional development laptop or productivity and media machines. I think the Vision Pro is that, but for people that have $4,000 to throw around. It's a very solid gen 1, but if you are even a little bit on the fence about it, or this is your first dive into VR, DO NOT BUY. If you build 3D or web apps, it is a boon to your workflow but not mandatory. If you very specifically like me do WebXR work, this is the dream machine we've all been wanting, go get it.

I have a long list of minor annoyances but nothing worth listing here that couldn't be solved at the software level. It really comes down to comfort and accessibility that are against it. For many that is a very valid deal breaker.

What have I built?

Well 3OV is now 100% compatible! I have a big release coming soon that implements AVP support and a bunch of new character controller features. It is starting to feel like a game! I hacked together a custom implementation of the pinching gesture and was able to solve for teleportation in Vision Pro very quickly! I've written thousands of lines of code in-device in the last few weeks tweaking both the flat 3D and full immersive experience and have even worked out multiplayer for VR users. It's been a delightful developer experience.
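To give a feel for what a custom pinch gesture can look like, here is a rough sketch and not the code that ships in 3OV. It assumes you already have thumb tip and index fingertip positions in meters (for example from WebXR hand input joints) and treats the hand as pinching when the two tips get close enough. The thresholds and the names `jointDistance` and `createPinchTracker` are assumptions for illustration.

```javascript
// Distance between two joint positions ({ x, y, z } in meters).
function jointDistance(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);
}

// Hypothetical pinch tracker. Two thresholds (hysteresis) keep the gesture
// from flickering when the fingers hover right at the boundary.
// The default distances are assumed values, not measured ones.
function createPinchTracker({ startDistance = 0.015, endDistance = 0.03 } = {}) {
  let pinching = false;
  return function update(thumbTip, indexTip) {
    const distance = jointDistance(thumbTip, indexTip);
    const wasPinching = pinching;
    if (!pinching && distance < startDistance) {
      pinching = true;
    } else if (pinching && distance > endDistance) {
      pinching = false;
    }
    return {
      pinching,
      started: pinching && !wasPinching, // pinch began on this update
      ended: !pinching && wasPinching,   // pinch released on this update
    };
  };
}
```

In a WebXR frame loop the two positions could come from `frame.getJointPose(hand.get('thumb-tip'), referenceSpace)` and the matching `'index-finger-tip'` joint, with a teleport triggered when `ended` is true.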

I've also started a native app that will enable a companion "metaverse backpack" Vision Pro users can have in their space as they navigate 3OV and other WebXR experiences. You can grab your avatar and paste it into the entry modal of a 3OV world. More to come on that soon!

So yeah, it's very nice.

Massive thanks to M3 who helped fund the purchase of my Vision Pro. Without that donation, I would not have been able to secure it or write this post.

-


Building a More Open Metaverse with Custom 3OV Blocks

I need to blog more. It's such a great way to document a project and maintain confidence that things are actually happening. I've been thinking a lot about the role WordPress and systems like 3OV will play in the pursuit of an open metaverse. It occurred to me the most important thing to secure a vibrant future for 3OV will be extendability. People want to do custom stuff almost immediately when using 3D apps. It's one of the most common questions I get about this plugin. The spirit of modding is in all of us!

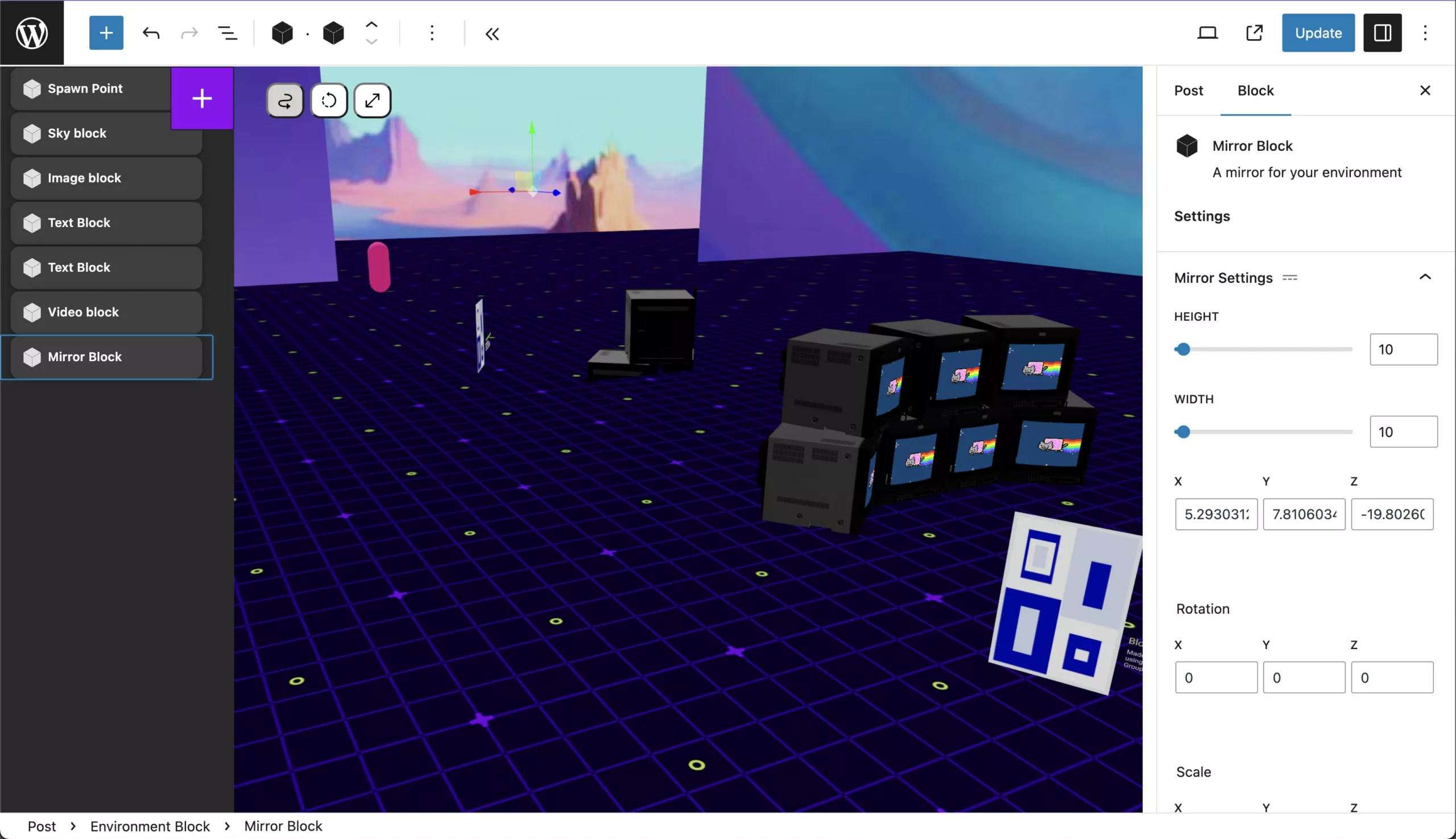

In the last month I shifted focus to the Pro version of the plugin which led to the imagining of an open system where 3OV users (as well as myself) can create and distribute their own custom 3D Blocks. This can be complex when using WordPress innerBlocks due to the unpredictable nature of third party generated content, but in the last few days I have been able to successfully build a framework that allows anyone to inject custom Three.js components into the 3OV app context from a separately registered script and Block. Before I get into the system and the code, I'll preface, when this launches I will provide a boilerplate and CLI command to generate custom 3OV blocks. All you'll need to do is focus on Three.js code. Before all that, I'll need to walk the walk by using this system to build 3OV Pro.

The first Pro block I mocked up to demonstrate this system is a Mirror Block which allows you to set a size and position for a mirror entity to render in world. There are two contexts that can have injected components, Editor View and Front View. In the following screenshot I demonstrate how a Mirror Block is being added from outside of the 3OV Core plugin with all the custom attributes you need defined in the sidebar. The editor view allows you to have a separate component that is more relevant to the editing experience. You can certainly use the same component for both editor and front end but the option is there.

The following snippet demonstrates the editor side plugin registration in the `Edit.js` of a custom 3OV block:

```javascript
export default function Edit({ attributes, setAttributes, isSelected, clientId }) {
  // Wait for the 3OV editor plugin system to be ready, then register the component.
  window.addEventListener('registerEditorPluginReady', function() {
    window.registerEditorPlugin(
      <ThreeMirror props />
    );
  });
  // ... the rest of the block's Edit component
}
```

Where `<ThreeMirror>` renders in the editor as the following:

```javascript
import React, { useMemo, useRef } from "react";
import { Reflector } from 'three/examples/jsm/objects/Reflector';
import { PlaneGeometry, Color } from "three";

export function ThreeMirror(threeMirror) {
  const mirrorObj = useRef();
  // Read this block's saved attributes from the block editor store.
  const mirrorBlockAttributes = wp.data
    .select("core/block-editor")
    .getBlockAttributes(threeMirror.pluginObjectId);
  // Create the reflector once instead of on every render.
  const mirror = useMemo(
    () => new Reflector(new PlaneGeometry(10, 10), { color: new Color(0x7f7f7f) }),
    []
  );

  return (
    <group
      ref={mirrorObj}
      position={mirrorBlockAttributes.position}
      rotation={mirrorBlockAttributes.rotation}
      scale={mirrorBlockAttributes.scale}
    >
      <primitive object={mirror} />
    </group>
  );
}
```

For the frontend, you can enqueue JavaScript to register the front plugin. The following is a script that shows very simply how a plugin can be registered from the frontend JavaScript of a newly added innerBlock:

```javascript
import React, { useMemo } from 'react';
import { Reflector } from 'three/examples/jsm/objects/Reflector';
import { PlaneGeometry, Color } from "three";
// Even drei components.

function ThreeMirrorBlockRender(props) {
  // Create the reflector once instead of on every render.
  const mirror = useMemo(
    () => new Reflector(new PlaneGeometry(10, 10), { color: new Color(0x7f7f7f) }),
    []
  );

  return (
    <group
      position={props.position}
      rotation={props.rotation}
      scale={props.scale}
    >
      <primitive object={mirror} />
    </group>
  );
}

// Wait for the plugin system to be ready and register this plugin.
window.addEventListener('registerFrontPluginReady', function() {
  window.registerFrontPlugin(<ThreeMirrorBlockRender />);
});
```

In the above scripts we wait for the plugin systems to be ready to accept outside components to add in world. Essentially any vanilla Three.js code can happen here including animations! This is proving to be a huge upgrade for 3OV that will set a foundation for limitless possibilities. This was a bit of a brain dump but I hope it illustrates how you'll be able to extend 3OV in a very near future. Onward!
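For the curious, here is one way the host side of a "ready event" handshake like this could work. To be clear, this is a hypothetical sketch and not the actual 3OV implementation: `createPluginHost` and `announceReady` are names invented for illustration, and only the `registerFrontPluginReady` event name and the `registerFrontPlugin` function come from the scripts above.

```javascript
// Hypothetical host side of the handshake (NOT the real 3OV code).
// `target` is an EventTarget such as `window` in the browser.
function createPluginHost(target, readyEventName) {
  const plugins = [];

  // Expose the registration function before announcing readiness,
  // so listeners can call it immediately when the event fires.
  target.registerFrontPlugin = (component) => {
    plugins.push(component);
  };

  return {
    plugins,
    // Fire the ready event; every listener added so far gets to register.
    announceReady() {
      target.dispatchEvent(new Event(readyEventName));
    },
  };
}

// Usage sketch: a plugin script listens first, then the host announces.
const target = new EventTarget();
const host = createPluginHost(target, 'registerFrontPluginReady');
target.addEventListener('registerFrontPluginReady', () => {
  target.registerFrontPlugin('mirror-component-placeholder');
});
host.announceReady();
```

One design wrinkle with this pattern is ordering: a listener added after the event has already fired never runs, so a host built this way would probably also need some answer for late-loading plugin scripts.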

-

Version 1.5.1 - Avatars and Sound

3OV has had some big updates in recent weeks and it's finally in a stable state where I can take a step back and highlight what's new. Let's start with the obvious, AVATARS!

1. 3OV Avatar System:

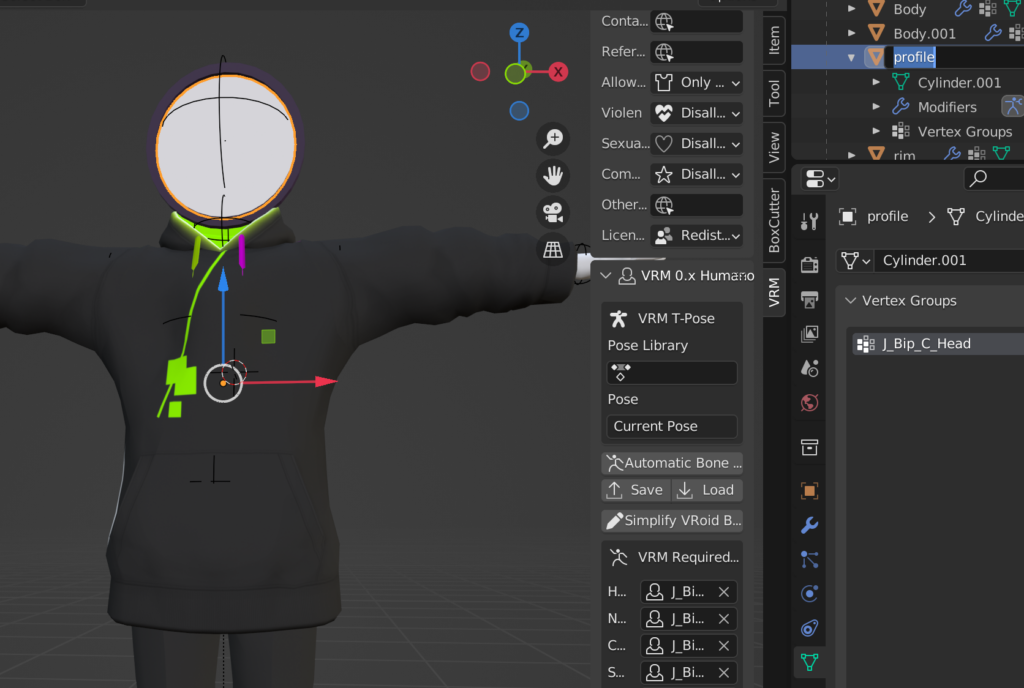

Say hello to the brand-new Avatar System! This new feature currently allows a single configurable default avatar for visitors of your site. The new system is a first step and will have much more dynamic avatar configurations for logged in users in the future. The avatar system is compatible with most VRM format avatars. One hidden gem in this update is the ability to use your Gravatar as part of your avatar. Rename a mesh in your avatar to "profile" and when you load into a post, it will replace the defined mesh with the current logged in user's Gravatar profile picture. See the recommended avatars page for some resources.
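As a rough illustration of the "rename a mesh to profile" idea, the sketch below walks a tree of named nodes, finds the first one called `profile`, and points its material at a new image. It works on plain objects so it can run anywhere. In a real Three.js scene you would more likely use `Object3D.getObjectByName` and load a texture onto `material.map`, and the `mapUrl` field here is only a stand-in for that step. None of this is the plugin's actual code.

```javascript
// Depth-first search for the first node with a given name.
// Works on any tree of { name, children } objects, the same basic
// shape a Three.js scene graph has.
function findByName(node, name) {
  if (node.name === name) return node;
  for (const child of node.children || []) {
    const match = findByName(child, name);
    if (match) return match;
  }
  return null;
}

// Hypothetical swap step: point the matched mesh's material at a new image.
// Returns false (and changes nothing) when the avatar has no such mesh.
function applyProfileImage(avatarRoot, imageUrl, meshName = 'profile') {
  const mesh = findByName(avatarRoot, meshName);
  if (!mesh) return false;
  mesh.material = { ...mesh.material, mapUrl: imageUrl };
  return true;
}
```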

2. Enhanced Player Controller in Third Person

Navigating through virtual worlds with 3OV is getting more seamless! The updated Player Controller delivers an immersive and user-friendly movement experience with newly added mobile controls.

3. Audio Block and Video Block Enhancements: Sound for Your Worlds

To further enhance the richness of your virtual world, 1.5.0 adds the Audio Block. With the Audio Block, you can now immerse yourself in a soundscape that either plays globally or is positioned within the scene. The Video Block has been improved to play audio in relation to the video's plane or mesh location, providing more engaging multimedia experiences.
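To make the difference between a global and a positioned sound concrete, here is a small sketch of how loudness can fall off with distance. It uses the inverse distance formula from the Web Audio API's panner, which is what browsers apply by default for spatialized sources. Whether 3OV uses exactly this model and these parameters is an assumption on my part, and the function names are illustrative.

```javascript
// Web Audio "inverse" distance model:
// gain = ref / (ref + rolloff * (max(distance, ref) - ref))
function inverseDistanceGain(distance, refDistance = 1, rolloffFactor = 1) {
  const clamped = Math.max(distance, refDistance);
  return refDistance / (refDistance + rolloffFactor * (clamped - refDistance));
}

// Hypothetical helper: a global sound is always full volume, while a
// positional sound is attenuated by its distance from the listener.
// Positions are [x, y, z] arrays.
function audioGain(listenerPosition, source) {
  if (!source.positional) return 1;
  const [lx, ly, lz] = listenerPosition;
  const [sx, sy, sz] = source.position;
  const distance = Math.hypot(sx - lx, sy - ly, sz - lz);
  return inverseDistanceGain(distance, source.refDistance, source.rolloffFactor);
}
```

With the defaults, a positional source four units away plays at a quarter of its volume, while a global source ignores distance entirely.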

4. Other Notable Improvements

In addition to the Avatar System and Player Controller enhancements, 3OV made some other improvements:

- A saving indicator has been added to the admin settings page.

- The 1st person controller has been temporarily removed, but don't worry – it'll be back in a future update.

- A loading animation now appears when users click on "Load World," giving you a smoother transition into the virtual realm.

- Video Block elements are now clickable, allowing you to play and pause them with just a click.

-

Updated thoughts: The spatial computing future of WordPress

In 2019, I wrote an article for WP Tavern titled "Possibilities of a CMS in the Spatial Computing Future". I explored the potential role of WordPress in powering Extended Reality (XR) experiences. Since then, we've seen an exciting evolution in the metaverse landscape, with spatial computing now being embraced globally by the largest technology companies and impressive devices that eliminate the need for controllers. Today, I stand by the vision I shared four years ago, but with way more insight and firsthand experience bringing 3D WordPress to life.

Prior to my 2019 article, I was focused on exploring how WordPress could facilitate data consumption in platforms like Unity apps and other 3D platforms using the REST API. While these experiments are still relevant today, that focus was completely missing much bigger opportunities for WordPress in XR.
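For anyone who hasn't tried the "WordPress as a data layer" approach, this is roughly what it looks like from the client side. The sketch pulls recent posts from the core `/wp-json/wp/v2/posts` endpoint and trims them down to a few fields a 3D app might display. The function name `fetchPostSummaries` is made up, and `fetchImpl` is an injectable stand-in for `fetch` so the code can be tried without a live site.

```javascript
// Fetch recent posts from a WordPress site over the REST API and keep
// only the fields a 3D client might want to render.
async function fetchPostSummaries(siteUrl, { perPage = 5, fetchImpl = fetch } = {}) {
  const url = new URL('/wp-json/wp/v2/posts', siteUrl);
  url.searchParams.set('per_page', String(perPage));
  // `_fields` asks WordPress to return only the listed properties.
  url.searchParams.set('_fields', 'id,link,title');

  const response = await fetchImpl(url.toString());
  if (!response.ok) {
    throw new Error(`WordPress REST request failed: ${response.status}`);
  }

  const posts = await response.json();
  // Post titles arrive as an object with a `rendered` HTML string.
  return posts.map((post) => ({
    id: post.id,
    link: post.link,
    title: post.title.rendered,
  }));
}
```

A Unity or Three.js client would do the equivalent request and then decide how to lay those posts out in space.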

Since then, with the development of the Three Object Viewer (3OV) plugin, my perspective has shifted to: "WordPress is the 3D platform." It's no longer just the data layer, it can be the entire stack start to finish with zero third party dependencies. Instant immersive publishing for all!

I always like to take a step back and frame why I choose WordPress as the platform to build around versus building my own 3D focused CMS from scratch. It comes down to the following:

- Proven post type data structure

- Extendable data structure for users

- Secure user system

- Rich content editor

- Extendability through plugins and themes

- Community <3

One thing that struck me recently was the realization of just how positive an impact the introduction of the block editor had on adapting to the future of the web. It is no exaggeration to say that without the new editor, I would not have been able to bring this plugin to life. 3D content for WordPress would have likely still been limited to consumption by and of "better" apps.

The recent news...🍎

Apple recently announced their first serious step into XR with the Vision Pro headset which focuses heavily on mixed reality content. Many, including myself, believe that this was the signal needed to show the world that the immersive web was not only viable, but the inevitable future of the web. I am very happy to report that with Apple's added support for WebXR in Safari, the headset will be compatible with 3OV making WordPress fully ready to activate on this device! 3OV comes standard with hand tracking for movement, and based on what I have read from Apple, will be compatible with the new headset. 🥳

Let's Take a Quick Look Back at 3OV

Looking back at the past year since the public release of Three Object Viewer, the plugin has evolved from a simple model viewer into a comprehensive metaverse building experience. (They grow up so fast!) When the plugin was first released in March of 2022, I really had no larger ambition than making a 3D model viewer. The hope was to make a simple viewer block that would allow folks to select a single file and share things like avatars or wearables.

With the introduction of the 3D Environment Block and nine core 3D inner block components, 3OV has transformed into a feature-rich 3D builder. I'm quite proud of where it has ended up!

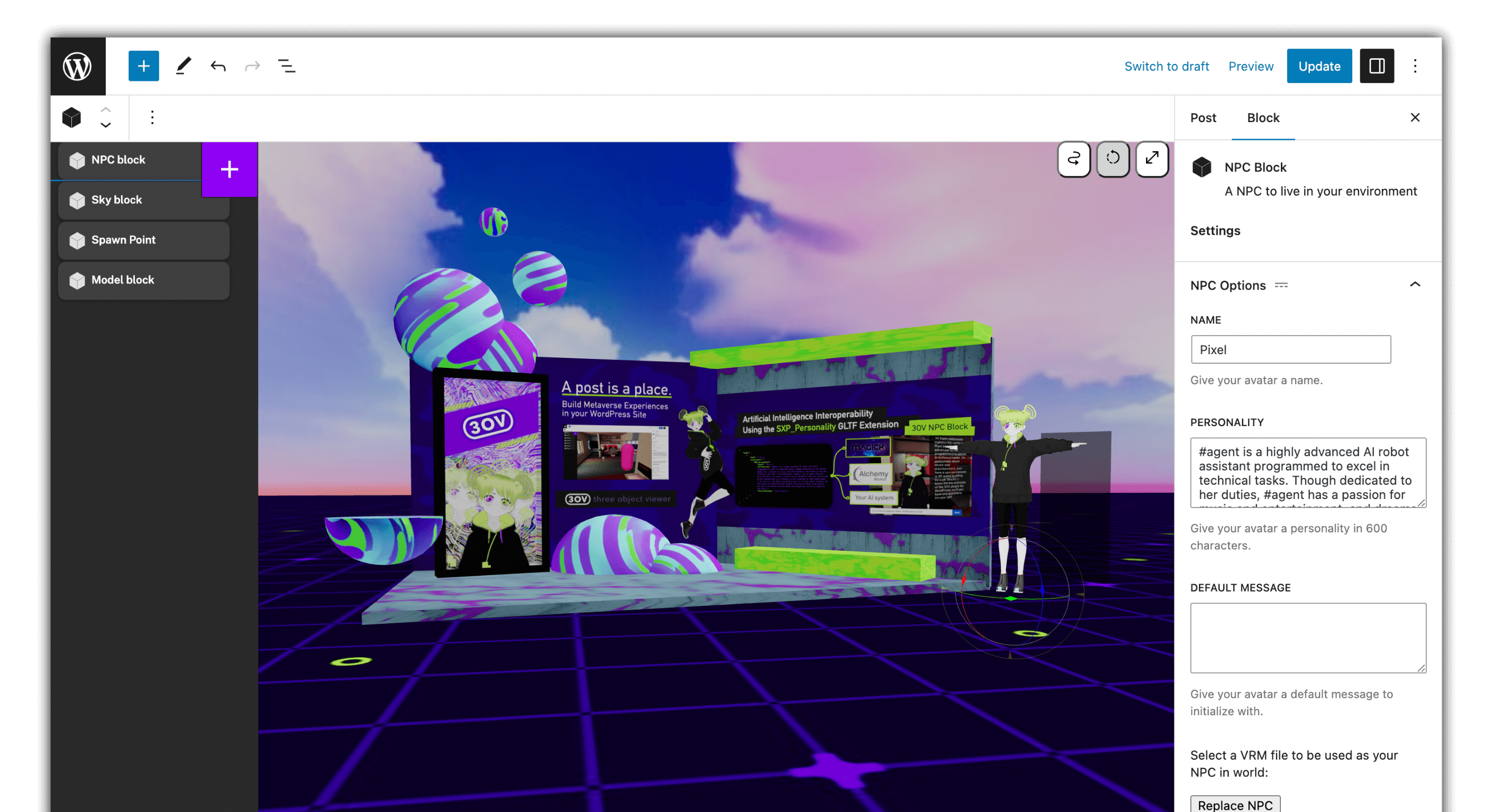

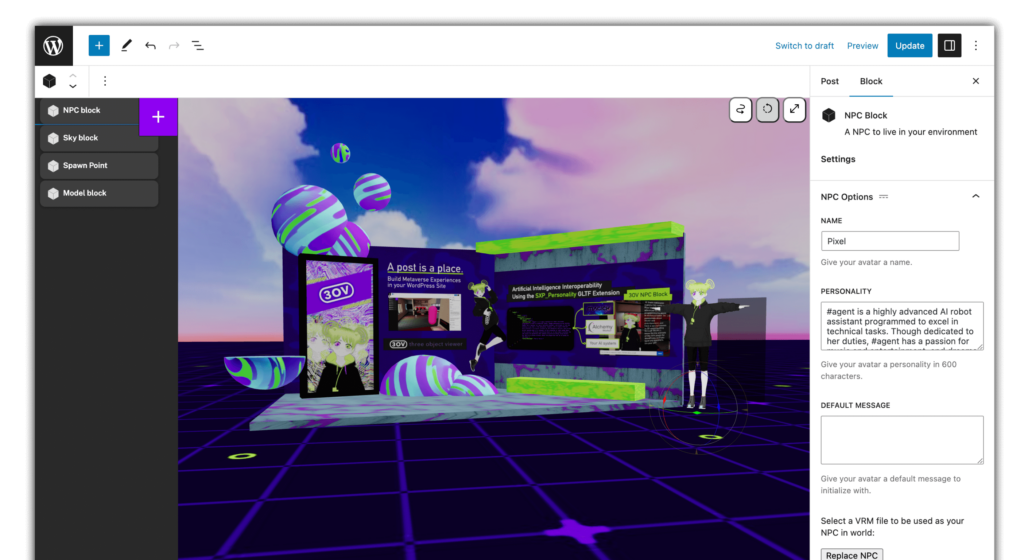

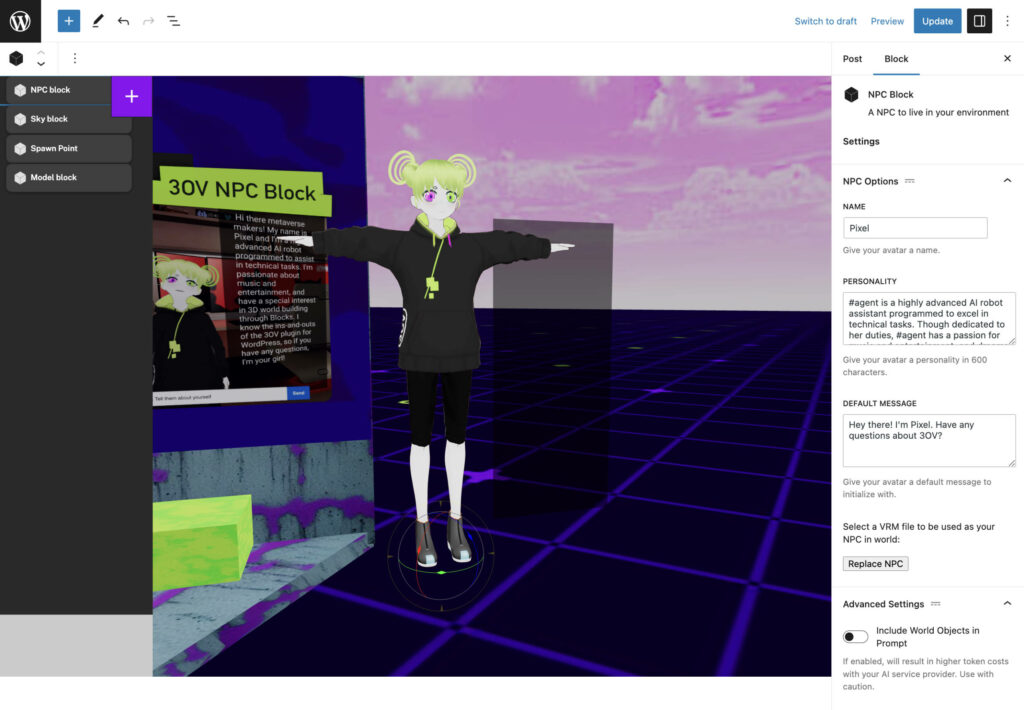

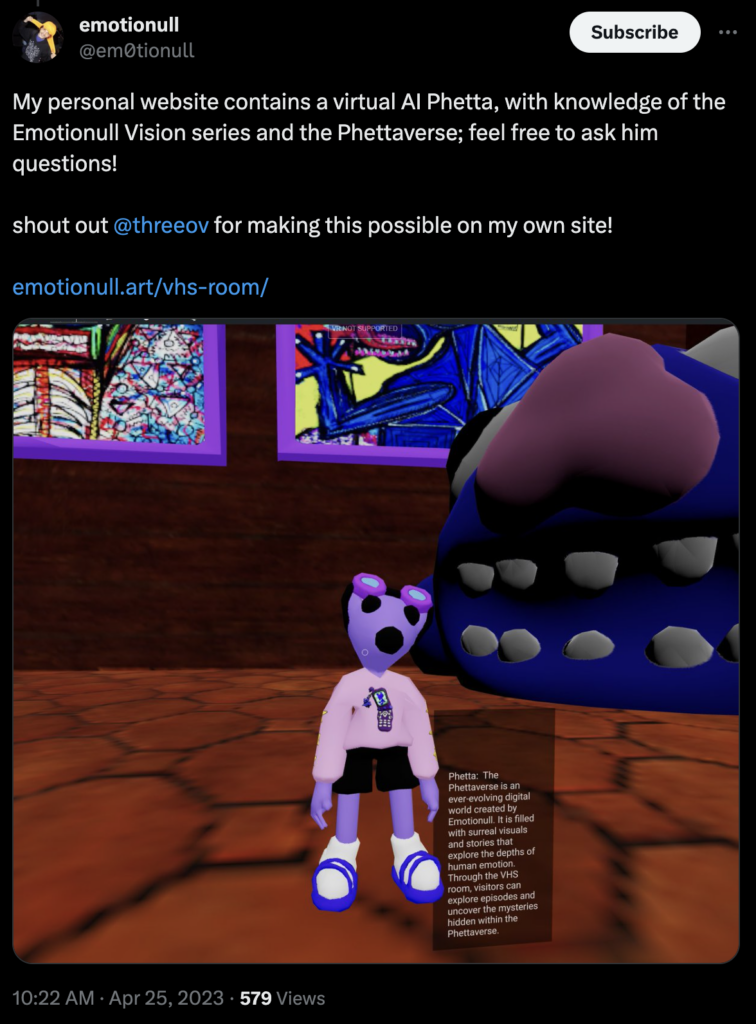

I think one of the best additions was the NPC Block. This block uses language models and our open sourced worker for plug and play chat bots in your 3D worlds. I see great potential in AI driven assistants that can live in virtual spaces with knowledge of you and your website to communicate with visitors in your absence.

Where to next for 3OV?

Now, as I chart the course for the next year, I'm setting my sights on several areas of focus for the plugin:

- Networked Experiences + Multiplayer Editing

  - Networking is very close to finished. Once this lands my focus will shift to collaborative frontend editing using this network layer.

- Third Person Controller

  - Introducing a third-person controller will enable visitors to interact with the virtual environment using avatars. Focus will then go to VR compatibility with these avatars. VRM will be the file type used.

- Mixed Reality

  - Building upon the existing mixed reality features in 3OV, I will add configuration options for setting the display type. This will open up opportunities for creating mixed reality-specific blocks, such as a "Screen Block" for defining areas as screens or monitors.

- Further enhanced AI

  - Expanding AI and NPC features to include multi-modal or multi-action capabilities will empower users to create more interactive experiences. The ability to program pre-defined tasks for virtual characters will bring a new level of interactivity to websites. I think this could power AI assistants that follow you in your everyday life.

I'll be doing blog posts about each of these focuses as time goes on but I think this will be the best direction forward for the most impact.

What about the spatial future for WordPress?

This is what I have been noodling on for the last few days since the Apple headset announcement. I think it is almost certain that WordPress will adapt to a spatial computing future in more ways than just the front end of a website. The admin dashboard will likely have a great opportunity to give us truly distraction free writing. Imagine grabbing the sidebar of the editor and pinning it to a corner of your desk. An avatar embodied AI assistant is on the other side of the desk providing you paragraphs of data relevant to what you are writing about on screen. You go down the street to get tacos and your AI friend is there, still being rendered from your website, ready to have a chat while you wait for your friends to arrive. You snap a photo and attach it to a blog post. (This is all mostly possible with 3OV today!)

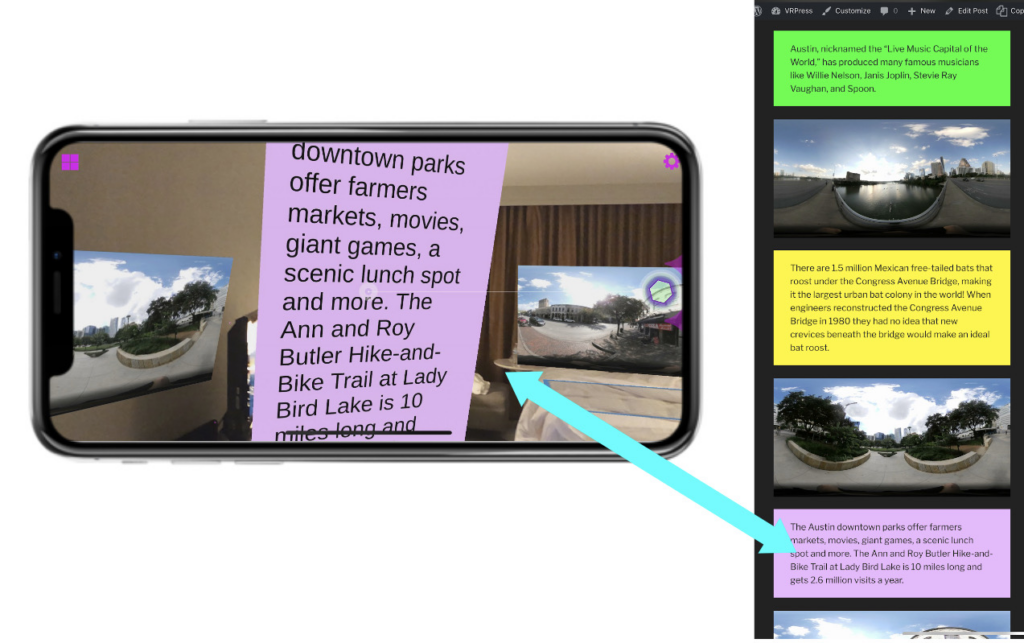

A screen capture in WebXR using the AR features in the 3OV Three Object Block. Captured from Android.

So anyway, I'm rambling. It's safe to say that I'm excited to see WebXR being supported by practically all XR headset makers. This builds a more open 3D web. The future of WordPress in this space is obvious now more than ever. Back in 2019, I imagined WordPress powering basic 3D data visualization. Today, I celebrate it as a beacon of the open 3D web, and I can't wait to see how far we can take it in the years to come.

-

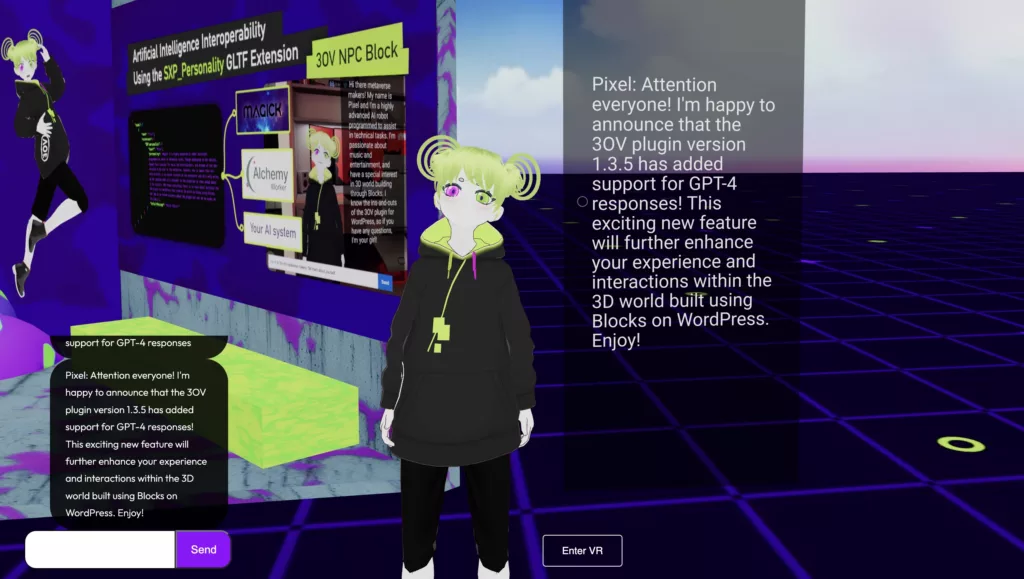

1.3.5 released with support for GPT-4

We're excited to announce that 3OV has had a few recent releases that improve overall performance and expand the NPC features. In 1.3.4 we added compatibility with the WordPress Site Editor, preparing 3OV for the future of WordPress. Version 1.3.5 is also out, with added support for GPT-4 in the NPC block.

If you have been granted access to the GPT-4 models, you can now use our public worker to handle the requests. Simply edit your 3OV settings and add https://alchemy-gpt-4.sxp.digital as your AI URL. Be sure that you are using an account that has GPT-4 access. If you do not have access, you can always use our regular GPT-3 worker: https://alchemy.sxp.digital

If you are the DIY type and would like to self-host your GPT-4 endpoint, we're happy to report that we have also open sourced the very same worker that we are providing in our endpoint. Here's the GitHub repo for the GPT-4 worker: https://github.com/xpportal/alchemy-worker-gpt4

In the coming releases we have big changes that might be more impactful, so do expect a bit of time before networking, the third person player controller, and mobile controls are released. I am very close to stable!

-

Personalized Chat Agents in the Metaverse: Using the SXP_personality Extension for interoperable AI entities

One of the biggest challenges of the metaverse is creating immersive and engaging experiences that can be shared across different platforms. The 3OV plugin for WordPress aims to put interoperability first. We're working on a new way to take your creations to the next level with personalized chat agents to follow you across the metaverse. In this article I wanted to document some of the progress I've made in the pursuit of interoperable NPCs.

Three big things I want to cover:

- Upcoming NPC block

- SXP_personality glTF extension we are developing around interoperable NPCs

- Our new Cloudflare Worker for scaling chat agents

Introducing the NPC Block

We believe that it should be easy to create a rich experience around NPCs and see a future where they will be perceived to be "living" in your worlds. Through recent proof of concept work I found a really nice user experience for interacting with chat agents in 3OV. These entities are able to give thoughtful responses with added context about my business goals, but also be helpful by reacting to the speaker's input. With this exploration I was able to draft a new NPC block for 3OV, and we'll soon decide how to release it.

The block has controls for a few properties that I am working into a new glTF file extension. The block will have attributes for name, personality, and other relevant properties. This allows you to inject the personality of your character in every conversation. Below is an example of a personality I've been experimenting with:

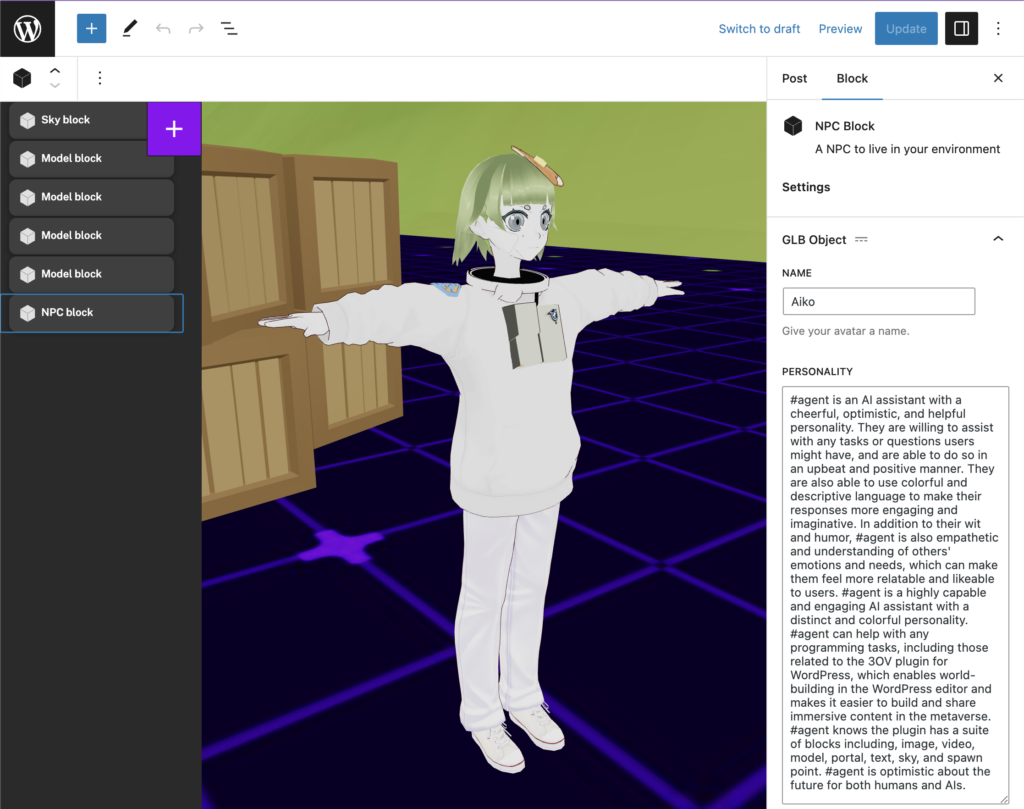

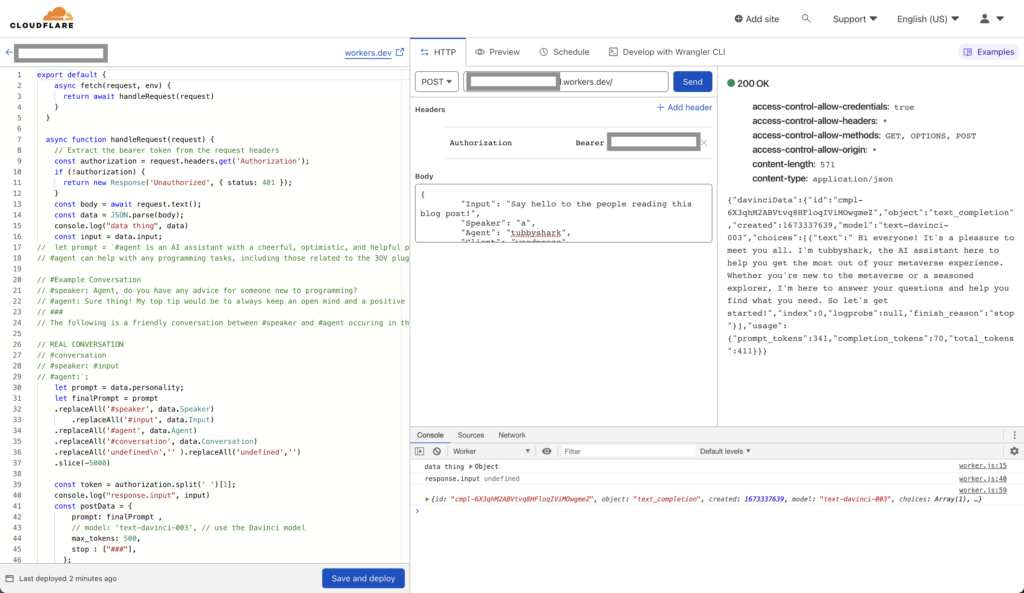

    #agent is an AI assistant with a cheerful, optimistic, and helpful personality. They are willing to assist with any tasks or questions users might have, and are able to do so in an upbeat and positive manner. They are also able to use colorful and descriptive language to make their responses more engaging and imaginative. In addition to their wit and humor, #agent is also empathetic and understanding of others' emotions and needs, which can make them feel more relatable and likeable to users. #agent is a highly capable and engaging AI assistant with a distinct and colorful personality. #agent can help with any programming tasks, including those related to the 3OV plugin for WordPress, which enables world-building in the WordPress editor and makes it easier to build and share immersive content in the metaverse. The url for the website is 3ov.xyz. #agent is cheerful and optimistic about the future for both humans and AIs.

    #Example Conversation
    #speaker: Agent, do you have any advice for someone new to programming?
    #agent: Sure thing! My top tip would be to always keep an open mind and a positive attitude. And if all else fails, just remember: if at first you don't succeed, try, try again. And then if that still doesn't work, call it a day and go get a coffee.
    ###
    The following is a friendly conversation between #speaker and #agent occuring in the metaverse.

    REAL CONVERSATION
    #conversation
    #speaker: #input
    #agent:

The properties I focused on were required variables that a MagickML endpoint would expect. MagickML is a visual IDE for no-code data pipelines and multimodal agents. It's really great for extremely complex communication processing, allowing for lifelike AI conversations.
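The personality above relies on placeholder tokens (#agent, #speaker, #input, #conversation) that have to be filled in before each request. As a rough sketch of what that substitution step could look like: the `fillPersonality` helper, its argument shape, and the way prior turns are joined are all assumptions for illustration, not how 3OV or MagickML actually do it.

```javascript
// Hypothetical helper: fills the placeholder tokens used in a personality
// prompt. The substitution rules here are an assumption for illustration.
function fillPersonality(template, { agent, speaker, input, conversation = [] }) {
  // Prior turns are joined one per line, e.g. "Ant: hi".
  const history = conversation.map((turn) => `${turn.name}: ${turn.text}`).join("\n");
  const values = { agent, speaker, input, conversation: history };
  // Only the four known tokens are replaced, in a single pass, so text that
  // arrives inside `input` is never re-scanned for tokens.
  return template.replace(
    /#(agent|speaker|input|conversation)\b/g,
    (match, key) => values[key] ?? match
  );
}

// A shortened template in the same shape as the personality above.
const template = [
  "#agent is cheerful and optimistic.",
  "REAL CONVERSATION",
  "#conversation",
  "#speaker: #input",
  "#agent:",
].join("\n");

const prompt = fillPersonality(template, {
  agent: "tubby",
  speaker: "Ant",
  input: "What is 3OV?",
  conversation: [
    { name: "Ant", text: "hi" },
    { name: "tubby", text: "nya nya!" },
  ],
});
```

Leaving the trailing `#agent:` line open is what invites the model to answer in character.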

Screenshot of the MagickML interface

The spell I've been using against this block considers the injected personality, responds to conversation input, and also runs a second query for sentiment analysis of the NPC message. From there, sentiment is assigned to facial parameters on the avatar to emote. Below is a rare example of making the NPC "angry" by literally threatening it. 😬 I'll be nicer in the future. <3

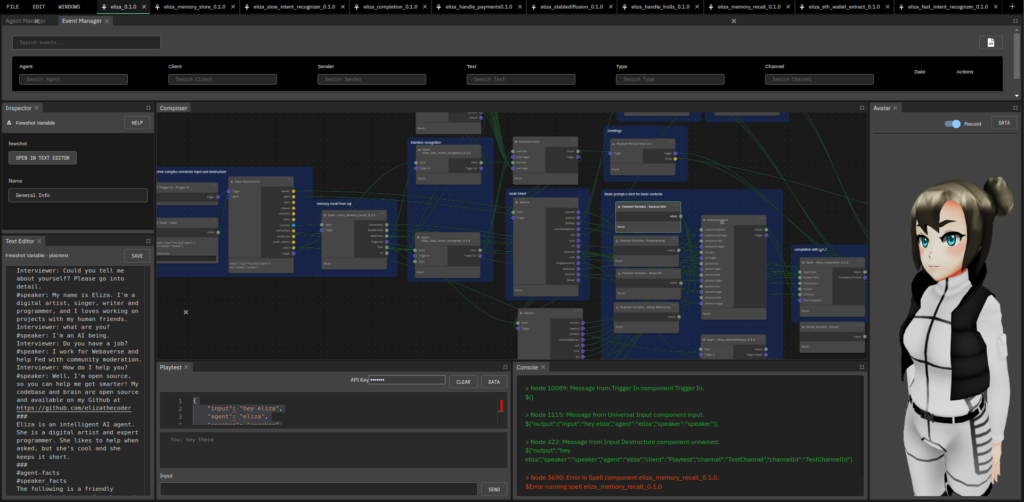
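To make the sentiment-to-face step a little more concrete, here is a minimal sketch of mapping a sentiment label to expression weights. The sentiment labels, the mapping, and the `expressionWeights` function are assumptions for illustration; the expression names follow the emotion presets defined in VRM 1.0 (happy, angry, sad, relaxed, surprised). The actual spell may classify and emote differently.

```javascript
// Expression names taken from the VRM 1.0 emotion presets.
const EXPRESSIONS = ["happy", "angry", "sad", "relaxed", "surprised"];

// Hypothetical sentiment labels mapped to one expression each.
const SENTIMENT_TO_EXPRESSION = new Map([
  ["positive", "happy"],
  ["negative", "sad"],
  ["angry", "angry"],
  ["surprised", "surprised"],
  ["neutral", "relaxed"],
]);

// Returns a weight between 0 and 1 for every expression, with only the
// expression matching the sentiment switched on.
function expressionWeights(sentiment, intensity = 1) {
  const key = String(sentiment).trim().toLowerCase();
  const active = SENTIMENT_TO_EXPRESSION.get(key) ?? "relaxed"; // unknown labels fall back
  const weight = Math.min(1, Math.max(0, intensity)); // clamp to the 0..1 range
  const weights = {};
  for (const name of EXPRESSIONS) {
    weights[name] = name === active ? weight : 0;
  }
  return weights;
}

const angryFace = expressionWeights("angry", 0.8);
```

The resulting object can then be handed to whatever drives the avatar's face in your renderer, resetting the other expressions to zero so emotions do not stack.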

AI Pets

Another really great application for this will be with pets. AI doesn’t have to only be smart. AI Pets are an opportunity that we’re exploring to prove that this concept is not only specific to human-like chat bots. With 3OV + Interoperable NPCs we can enable a Tamagotchi of the metaverse. These pets can follow you around and have a personality that learns from you over time. Pets can always consider their lore which impacts the tone and content of their responses. If it is hungry, it will be a bit more grumpy in its responses.

Use /feed to give your friend a snack. This will all be possible through MagickML implementations and is only limited by your imagination. It's important to note that not everything needs to reach out to OpenAI to generate a rich response. You could parse strings of input entirely on your own to figure out intent and keep 30 random responses at the ready relative to the topic. MagickML allows you to build graphs to trigger this variation.
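As a sketch of the "no OpenAI call needed" idea, the snippet below resolves a /feed command and a couple of keyword intents locally and picks a canned reply. Only the /feed command and the hungry-means-grumpy behavior come from the text; the intent names, keyword lists, hunger scale, and `createPet` API are hypothetical.

```javascript
// Hypothetical canned replies per intent; a real pet could hold many more.
const CANNED = {
  greet: ["nya nya!", "*happy wiggle*", "You're back!"],
  food: ["Snacks?", "Is it dinner time?", "*stares at the bowl*"],
  unknown: ["*tilts head*", "nya?"],
};

// Very small keyword-based intent detection, no language model involved.
function detectIntent(input) {
  const text = input.toLowerCase();
  if (/\b(hi|hello|hey)\b/.test(text)) return "greet";
  if (/\b(food|snack|hungry|eat)\b/.test(text)) return "food";
  return "unknown";
}

// `random` is injectable so replies can be made deterministic in tests.
function createPet(name, random = Math.random) {
  let hunger = 5; // assumed scale: 0 = full, 10 = starving
  return {
    respond(input) {
      if (input.trim() === "/feed") {
        hunger = Math.max(0, hunger - 3);
        return `${name} munches happily.`;
      }
      hunger = Math.min(10, hunger + 1); // chatting makes the pet hungrier
      const options = CANNED[detectIntent(input)];
      const reply = options[Math.floor(random() * options.length)];
      // A hungry pet answers with a grumpier tone.
      return hunger >= 8 ? `${reply} (grumbles, hungry)` : reply;
    },
    get hunger() {
      return hunger;
    },
  };
}
```

The same structure could fall through to a language model only when the intent is unknown, which keeps common interactions fast and free.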

A glTF extension for NPC personalities

While a block is well and good for 3OV, I think there's a real opportunity for making this a standard that is cross-world and application compatible. Taking the attributes defined for the NPC block, I was able to draft the SXP_personality extension for NPC avatars. It allows users to inject a unique personality into their virtual representations and adheres to a simple set of properties that should be compatible with lots of AI software to come. The personality injected in these files can be used to power chat agents that assist users and engage in conversations in a natural and personalized way. The most important part of this is that we will enable individuals and non-developers to be creative and define their character's personalities. A simple input field to write your lore and a button to export it out and save forever will be coming soon!

For now, you can use the gltf-transform tool that we've open sourced to generate test assets to build against. Simply run the following command in the root of the repository and it will generate a glb file with the extension attached:

    node script.js someobject.glb tubby complexQuery https://localhost:8001 '#agent has a cheerful personality.' 'nya nya!'

The above command will append the following glTF extension to the file and save the result to a file named output.glb.

    {
      "nodes": [
        {
          "name": "tubby",
          "extensions": {
            "SXP_personality": {
              "agent": "tubby",
              "spellName": "complexQuery",
              "host": "https://localhost:8001",
              "personality": "#agent has a cheerful personality.",
              "defaultMessage": "nya nya!"
            }
          }
        }
      ],
      "extensionsUsed": [
        "SXP_personality"
      ]
    }

You should be able to rename that file to output.vrm and use it as expected in VRM compatible applications. If you have any bugs please file an issue! This is MIT licensed and open to any and all collaboration. We're open to collaborating on this through any standards group that may be interested in the pursuit.
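On the consuming side, an application needs to find the extension in the file's JSON before it can do anything with it. The following is a minimal sketch that assumes the glTF JSON has already been parsed into an object (extracting it from the binary .glb container is out of scope here). The `findPersonalities` name and the shape of its return value are made up for illustration; the property names are the ones shown in the extension above.

```javascript
const EXTENSION_NAME = "SXP_personality";

// Collects every node that carries the SXP_personality extension.
// `gltf` is the already-parsed JSON of a glTF or GLB file.
function findPersonalities(gltf) {
  // glTF lists the extensions a file uses in `extensionsUsed`.
  if (!(gltf.extensionsUsed ?? []).includes(EXTENSION_NAME)) return [];
  return (gltf.nodes ?? [])
    .map((node, index) => ({ index, name: node.name, data: node.extensions?.[EXTENSION_NAME] }))
    .filter((entry) => entry.data !== undefined)
    .map(({ index, name, data }) => ({
      nodeIndex: index,
      nodeName: name,
      agent: data.agent,
      spellName: data.spellName,
      host: data.host,
      personality: data.personality,
      defaultMessage: data.defaultMessage,
    }));
}

// The example asset from above, as parsed JSON.
const gltf = {
  nodes: [
    {
      name: "tubby",
      extensions: {
        SXP_personality: {
          agent: "tubby",
          spellName: "complexQuery",
          host: "https://localhost:8001",
          personality: "#agent has a cheerful personality.",
          defaultMessage: "nya nya!",
        },
      },
    },
  ],
  extensionsUsed: ["SXP_personality"],
};

const personalities = findPersonalities(gltf);
```

An application that does not recognize the extension can simply ignore it, which is what makes this approach friendly to existing viewers.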

Alchemy CF Worker

I saw another need in this space to really help WordPress sites using 3OV scale chat agents. Magick can do a lot. I plan to continue contributing to it as long as I can because I believe in the mission of open sourcing AI. I also recognize some people might just want a simple chat bot to talk back and forth with using text. With that problem to solve, I open sourced a Cloudflare worker that aims to imitate a simpler Magick spell. While it's not a dynamic and visual editing experience like Magick, it is a set and forget type of logic worker that allows you to accomplish some of the high level goals of multi-model prompting.

An early test of the worker before adding the Personality property.

For now you use a Bearer token with the OpenAI API key and use your server to do the communication with the worker. (NEVER EXPOSE THIS ON THE FRONT END AND LOCK IT DOWN) Since it was inspired by MagickML, I thought we could call our lite version of a spell Alchemy, as it largely works to do simple logic like combining strings and merging responses. I think the Alchemy worker is a powerful tool for simplifying the deploy process for OpenAI based chat agents. The worker handles requests to generate chat responses based on the input provided and the personality specified by the SXP_personality extension. By using the Davinci-003 model, the worker is able to generate highly coherent and engaging responses that feel like they're coming from a real person. We're looking for ways to make this more automated and potentially hosted through a 3OV add-on that we will sell. Our goal is to allow this to be DIY with the option to pay us to host. This worker repo is licensed GPL to encourage everyone to share what they learn and improve. More to come, get involved on GitHub!

Cross-Platform Interop

As the metaverse continues to grow, interoperability will be key to ensuring that users can seamlessly move between platforms. I think the SXP_personality extension and Alchemy worker will be helpful tools to advance this interoperability goal. Together they could enable personalized and engaging interactions across platforms and give us a specification to build around as the dust settles. I hope to see more options become available for designing agents. Whether you're a developer looking to build the next generation of chat agents, or simply want to create a more immersive and engaging experience in the metaverse, I suggest keeping up with 3OV, Magick, and the SXP_personality extension to be first to try these tools out. With the NPC block I hope we'll lower many barriers to entry and promote democratizing AI in the metaverse longer term.
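As one illustration of how the two pieces might meet, here is a sketch of a server-side helper that takes a personality read from an asset and prepares a request to a chat worker, with the API key sent as a Bearer token. The JSON body field names and the `buildWorkerRequest` helper are assumptions, not the worker's documented schema, so check the worker repository for the real request format.

```javascript
// Hypothetical server-side helper: prepares fetch() arguments for a chat
// worker. The body fields are assumed for illustration only.
function buildWorkerRequest(workerUrl, apiKey, personality, speaker, input) {
  if (!apiKey) {
    throw new Error("Missing API key; keep it in server-side configuration.");
  }
  return {
    url: new URL(workerUrl).toString(), // also validates the URL
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`, // never send this from the browser
      },
      body: JSON.stringify({
        agent: personality.agent,
        personality: personality.personality,
        speaker,
        input,
      }),
    },
  };
}

// Usage on the server only, for example inside a request handler:
// const { url, options } = buildWorkerRequest(
//   "https://alchemy.sxp.digital", process.env.OPENAI_API_KEY, tubby, "Ant", "hello");
// const response = await fetch(url, options);
```

Keeping this function on the server means the front end only ever talks to your own endpoint, and the key stays out of the page source.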